To view the previous objective, click HERE.

Objective Topics:

- Describe the benefits of SIOC

- Enable and configure SIOC

- Configure/Manage SIOC

- Monitor SIOC

- Differentiate between SIOC and Dynamic Queue Depth Throttling features

- Given a scenario, determine a proper use case for SIOC

- Compare and contrast the effects of I/O contention in environments with and without SIOC

Describe the benefits of SIOC

Storage I/O Control (SIOC) is like QoS for your datastore: you can control the amount of I/O each VM gets during periods of I/O congestion. When you enable SIOC, the ESXi hosts start to monitor the datastore latency, and when the latency threshold is reached, SIOC allocates I/O resources to the VMs in proportion to their shares.
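As a rough illustration of "allocate in proportion to shares once the threshold is reached", here is a toy Python model. It is a sketch under stated assumptions, not how ESXi implements SIOC: the fixed `capacity_iops` budget, the function name `sioc_allocation` and the VM names are all hypothetical (real SIOC works by adjusting device queue slots based on observed latency), and 30 ms is just an illustrative threshold. The Low/Normal/High values are the vSphere per-disk share presets.

```python
from typing import Dict, Optional

# vSphere per-virtual-disk share presets.
SHARES = {"low": 500, "normal": 1000, "high": 2000}


def sioc_allocation(vm_shares: Dict[str, int],
                    latency_ms: float,
                    threshold_ms: float,
                    capacity_iops: float) -> Optional[Dict[str, float]]:
    """Toy model: return a per-VM IOPS split, or None when nothing is throttled.

    Assumption: while congested, the datastore can serve a fixed,
    hypothetical `capacity_iops`, which is divided by shares.
    """
    if latency_ms < threshold_ms:
        return None  # below the threshold: SIOC does not throttle anyone
    total = sum(vm_shares.values())
    # Each VM gets capacity in proportion to its share of the total.
    return {vm: capacity_iops * s / total for vm, s in vm_shares.items()}


if __name__ == "__main__":
    # Hypothetical VMs sharing one datastore.
    vms = {"sql01": SHARES["high"], "web01": SHARES["normal"], "test01": SHARES["low"]}
    print(sioc_allocation(vms, latency_ms=12.0, threshold_ms=30.0, capacity_iops=7000))
    print(sioc_allocation(vms, latency_ms=45.0, threshold_ms=30.0, capacity_iops=7000))
```

With 3,500 total shares, the congested case splits the hypothetical 7,000 IOPS as 4,000 / 2,000 / 1,000. Below the threshold, the function returns `None` because shares only matter once there is contention.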

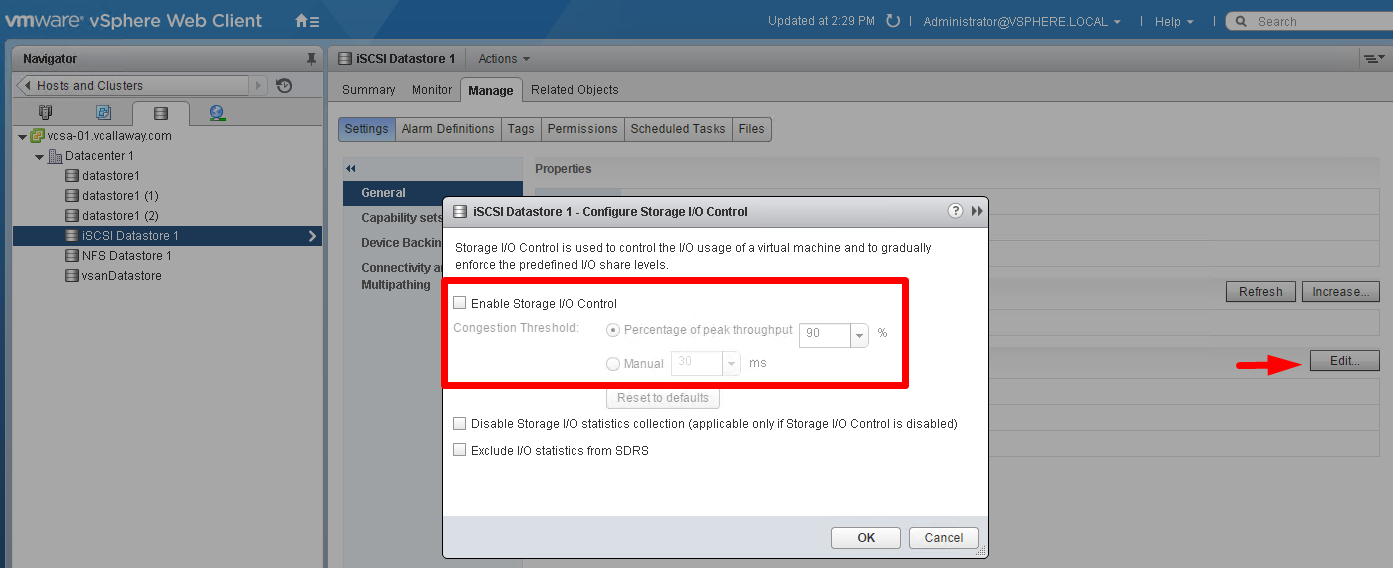

Enable and configure SIOC

Mine is greyed out because my VM is not supported for SIOC, but this is still how you would enable it.

Determine a proper use case for SIOC

For quite a while, we have been able to give bandwidth fairness to VMs running on the same host via SFQ, the start-time fair queuing scheduler. This scheduler ensures share-based allocation of I/O resources between VMs on a per-host basis. It is when we have VMs accessing the same datastore from different hosts that we've had to implement a distributed I/O scheduler. This is called PARDA, the Proportional Allocation of Resources for Distributed Storage Access. PARDA carves up the array queue among all the virtual machines sending I/O to the datastore on the array, and adjusts each host's per-datastore queue size according to the sum of the shares of the virtual machines on that host.
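To make "adjusts the per host per datastore queue size" concrete, here is a Python sketch of a latency-driven window update modeled on the control law described in the PARDA research paper: `w(t+1) = (1 - gamma) * w(t) + gamma * (L / L(t) * w(t) + beta)`, where `L` is the latency threshold, `L(t)` the observed latency, and `beta` is proportional to the host's aggregate VM shares. Treat this as an approximation, not ESXi code. The latency model (fixed array throughput, so latency = outstanding I/Os / throughput by Little's law), the `/ 100` share scaling, the clamp limits and all names are assumptions for illustration.

```python
from typing import Dict


def parda_window(w: float, latency_ms: float, threshold_ms: float, beta: float,
                 gamma: float = 0.2, w_min: float = 4.0, w_max: float = 256.0) -> float:
    """One control step for a host's queue window on a shared datastore."""
    # Shrink the window when latency is above the threshold, grow it when below;
    # beta adds a share-proportional increment.
    target = (threshold_ms / latency_ms) * w + beta
    new_w = (1 - gamma) * w + gamma * target  # smooth the change
    return max(w_min, min(w_max, new_w))      # assumed clamp limits


def simulate(host_shares: Dict[str, int], threshold_ms: float = 30.0,
             array_ios_per_ms: float = 4.0, steps: int = 300) -> Dict[str, float]:
    # Every host starts with the same window (queue depth).
    windows = {h: 32.0 for h in host_shares}
    for _ in range(steps):
        # Assumed latency model: fixed array throughput, so latency grows
        # linearly with the total number of outstanding I/Os (Little's law).
        latency_ms = sum(windows.values()) / array_ios_per_ms
        # All hosts see the same datastore latency and update in parallel.
        windows = {h: parda_window(w, latency_ms, threshold_ms, beta=host_shares[h] / 100)
                   for h, w in windows.items()}
    return windows


if __name__ == "__main__":
    # Hypothetical hosts: esx01's VMs total 2000 shares, esx02's VMs total 4000.
    final = simulate({"esx01": 2000, "esx02": 4000})
    print({h: round(w, 1) for h, w in final.items()})
```

In this model the two windows settle at about 60 and 120. Both hosts see the same latency, so each host's steady-state window ends up proportional to its `beta`, giving the same 1:2 ratio as the hosts' total shares.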

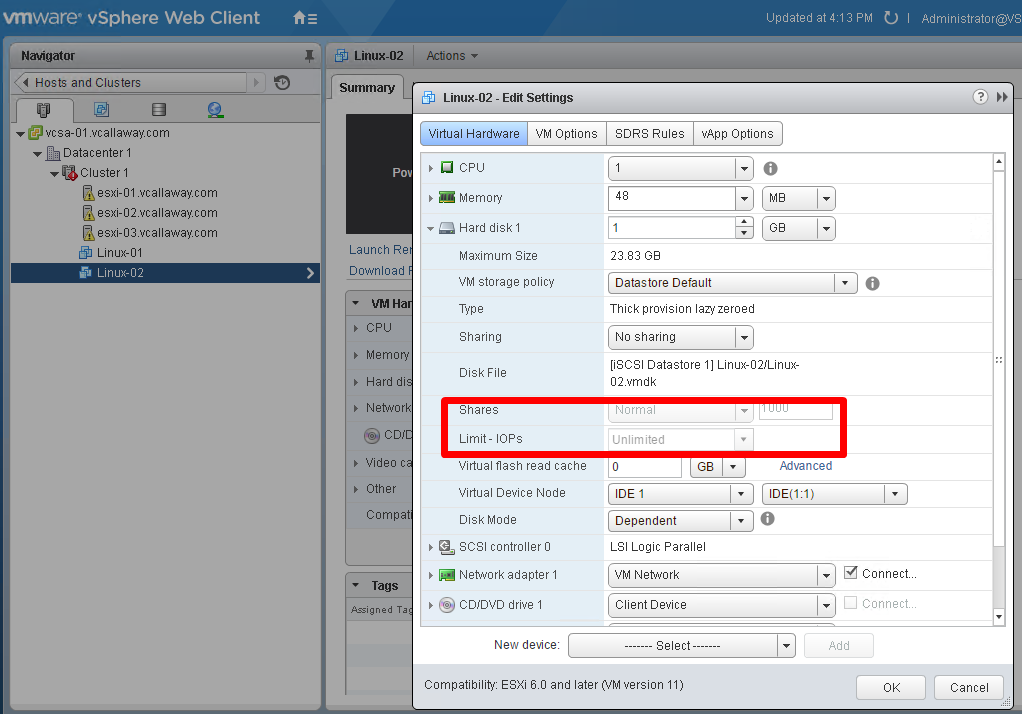

If SIOC is enabled on the datastore and the datastore's latency threshold is exceeded because of the amount of disk I/O the VMs are generating, the I/O bandwidth allocated to the VMs sharing the datastore is adjusted according to the share values assigned to those VMs.
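The effect of contention with and without SIOC can be compared with a deliberately simplified Python model. Assumptions, all hypothetical: without SIOC, every busy host gets an equal slice of the datastore (same device queue depth), and SFQ splits that slice by shares only among the VMs on that host. With SIOC, shares are honored across hosts. The host and VM names and the fractions are illustrative, not measurements.

```python
from typing import Dict

# Hypothetical layout: host -> {vm: disk shares} (Low=500, High=2000).
LAYOUT: Dict[str, Dict[str, int]] = {
    "esx01": {"test01": 500},
    "esx02": {"sql01": 2000, "sql02": 2000},
}


def without_sioc(layout: Dict[str, Dict[str, int]]) -> Dict[str, float]:
    # Assumed: each host gets an equal fraction of the datastore, and
    # per-host SFQ splits it by shares among that host's VMs only.
    per_host = 1 / len(layout)
    return {vm: per_host * s / sum(vms.values())
            for vms in layout.values() for vm, s in vms.items()}


def with_sioc(layout: Dict[str, Dict[str, int]]) -> Dict[str, float]:
    # Shares count across all hosts using the datastore.
    total = sum(s for vms in layout.values() for s in vms.values())
    return {vm: s / total for vms in layout.values() for vm, s in vms.items()}


if __name__ == "__main__":
    print({vm: f"{f:.0%}" for vm, f in without_sioc(LAYOUT).items()})
    print({vm: f"{f:.0%}" for vm, f in with_sioc(LAYOUT).items()})
```

In this scenario, the low-share test VM, alone on its host, gets 50% of the datastore without SIOC, while each high-share SQL VM gets 25%. With SIOC, the split follows the shares: about 11% for the test VM and 44% for each SQL VM. That is the kind of use case where enabling SIOC pays off.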

Source: VMware Blog Entry

To move on to Objective 4.1, click HERE.