Objective 2.1 Topics:

- Determine Use Cases for Raw Device Mapping (RDM)

- Apply storage presentation characteristics according to a deployment plan

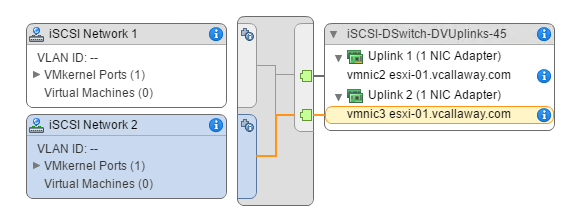

- VMFS Re-signaturing

- LUN Masking using PSA-related Commands

- Create/Configure multiple VMkernel ports for use with iSCSI port bindings

- Configure / Manage vSphere Flash Read Cache

Determine Use Cases for Raw Device Mapping (RDM)

Virtual RDM vs. Physical RDM

Virtual Mode:

The VMkernel sends only READ and WRITE to the mapped device. The mapped device appears to the guest operating system exactly the same as a virtual disk file in a VMFS volume. The real hardware characteristics are hidden. If you are using a raw disk in virtual mode, you can realize the benefits of VMFS such as advanced file locking for data protection and snapshots for streamlining development processes. Virtual mode is also more portable across storage hardware than physical mode, presenting the same behavior as a virtual disk file.

Physical Mode:

The VMkernel passes all SCSI commands to the device, with one exception: the REPORT LUNs command is virtualized so that the VMkernel can isolate the LUN to the owning virtual machine. Otherwise, all physical characteristics of the underlying hardware are exposed. Physical mode is useful to run SAN management agents or other SCSI target-based software in the virtual machine. Physical mode also allows virtual-to-physical clustering for cost-effective high availability.

Use cases:

Microsoft Clustering: If you have a Microsoft cluster that spans multiple hosts and requires shared disks, the disks should be presented to the guest nodes as physical-mode RDMs rather than as VMDK virtual disks. Sharing a virtual disk across hosts will not work because of VMware’s locking mechanism on virtual disks.

Application SAN Awareness: Some virtual machines have SAN-aware applications installed that integrate directly with the SAN software and hardware. For these applications to work, the physical SAN may need direct access to the disk to manage snapshots, backups, replication, deduplication, or whatever else the SAN feature set supports.
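To make the decision concrete, here is a minimal sketch of the use cases above as a small Python helper. The `RdmRequirements` class and its field names are hypothetical, made up for illustration. The only real CLI detail is that `vmkfstools -r` creates a virtual-mode mapping file and `vmkfstools -z` creates a physical-mode one; the device and datastore paths in the usage line are placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RdmRequirements:
    """Hypothetical checklist; field names are illustrative only."""
    needs_snapshots: bool = False      # VM snapshots of the mapped disk
    cross_host_mscs: bool = False      # Microsoft cluster spanning hosts
    san_aware_software: bool = False   # SAN agents or array-integrated tools


def choose_rdm_mode(req: RdmRequirements) -> str:
    """Return 'physical' or 'virtual' following the use cases above."""
    wants_physical = req.cross_host_mscs or req.san_aware_software
    if wants_physical and req.needs_snapshots:
        # VM snapshots are a virtual-mode benefit, so the two conflict.
        raise ValueError("physical mode and VM snapshots cannot be combined")
    return "physical" if wants_physical else "virtual"


def vmkfstools_args(mode: str, device: str, mapping_file: str) -> List[str]:
    """Build (not run) the vmkfstools command that creates the mapping file."""
    flag = {"virtual": "-r", "physical": "-z"}[mode]
    return ["vmkfstools", flag, device, mapping_file]
```

For example, `vmkfstools_args(choose_rdm_mode(RdmRequirements(cross_host_mscs=True)), "/vmfs/devices/disks/naa.EXAMPLE", "/vmfs/volumes/datastore1/node1/quorum-rdm.vmdk")` yields the argument list for a physical-mode mapping.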

Apply Storage Presentation Characteristics according to a deployment plan:

VMFS Re-Signaturing

When re-signaturing datastores consider the following:

- Re-signaturing is irreversible because it overwrites the original VMFS UUID.

- The VMFS datastore is no longer treated as a LUN copy, but appears as an independent datastore.

- A spanned datastore can be resignatured only if all its extents are online.

- Re-signaturing is crash and fault tolerant: if the process is interrupted, it can be resumed later.
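From the command line, re-signaturing is typically done with `esxcli storage vmfs snapshot`. The sketch below only builds the argument lists rather than running them, so it can be read and tested off-host. The function names are my own, and the label in the usage line is a placeholder.

```python
from typing import List, Optional


def list_snapshot_volumes() -> List[str]:
    """Command that lists unresolved VMFS copies visible to the host."""
    return ["esxcli", "storage", "vmfs", "snapshot", "list"]


def resignature(label: Optional[str] = None,
                uuid: Optional[str] = None) -> List[str]:
    """Command that resignatures one VMFS copy, chosen by label or UUID."""
    if (label is None) == (uuid is None):
        raise ValueError("pass exactly one of label or uuid")
    if label is not None:
        selector = f"--volume-label={label}"
    else:
        selector = f"--volume-uuid={uuid}"
    return ["esxcli", "storage", "vmfs", "snapshot", "resignature", selector]
```

Running `" ".join(resignature(label="datastore1"))` gives the one-liner you would paste into an SSH session. Because the operation is irreversible, it is worth running the list command first and checking that every extent is online.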

LUN Masking Using PSA-related Commands

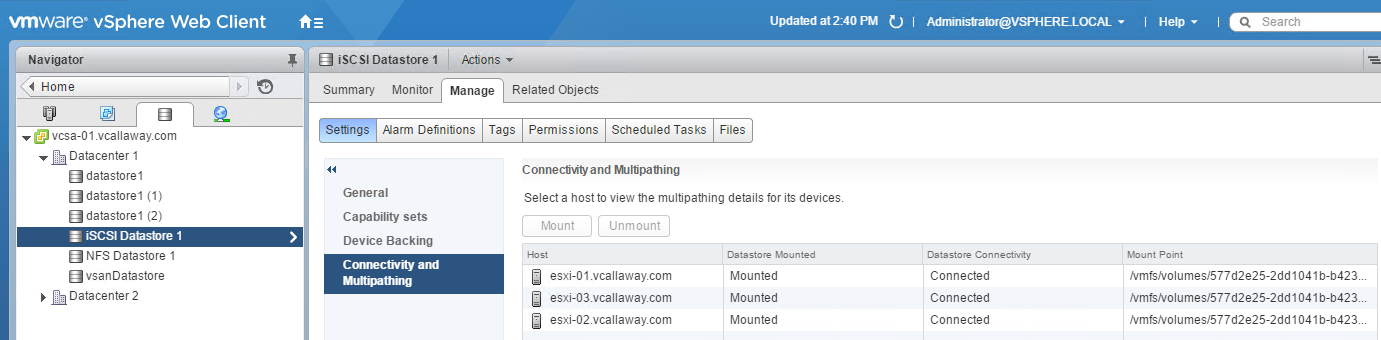
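For reference, a typical masking sequence with the `MASK_PATH` plugin looks roughly like the sketch below: add a claim rule, load it, unclaim the path, then run the rules. The adapter, channel, target, LUN, and rule number in the usage line are placeholders, not values taken from the screenshot, and the function name is hypothetical. The commands are built as lists rather than executed.

```python
from typing import List


def mask_lun_commands(rule_id: int, adapter: str, channel: int,
                      target: int, lun: int) -> List[List[str]]:
    """Build the esxcli sequence that masks one path by location."""
    location = [
        f"--adapter={adapter}",
        f"--channel={channel}",
        f"--target={target}",
        f"--lun={lun}",
    ]
    base = ["esxcli", "storage", "core"]
    return [
        # 1. Add a claim rule that hands the path to MASK_PATH.
        base + ["claimrule", "add", f"--rule={rule_id}",
                "--type=location", *location, "--plugin=MASK_PATH"],
        # 2. Load the rule from the config file into the VMkernel.
        base + ["claimrule", "load"],
        # 3. Release the path from the plugin that currently owns it.
        base + ["claiming", "unclaim", "--type=location", *location],
        # 4. Re-run the claim rules so MASK_PATH claims the path.
        base + ["claimrule", "run"],
    ]
```

A call such as `mask_lun_commands(500, "vmhba33", 0, 0, 1)` returns the four commands in order. A LUN with several paths needs one rule per path, and `esxcli storage core claimrule list` shows whether the rule appears in both the file and runtime classes.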

Create / Configure Multiple VMkernel Ports for use with iSCSI Port Bindings:

I already have this done from going through my VCP6 study guide, but I assume this is what VMware is looking for.

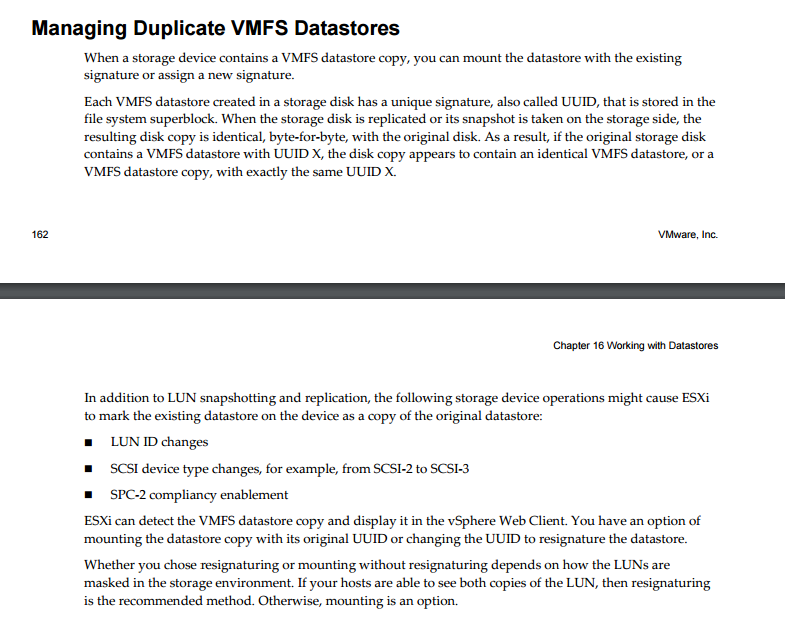

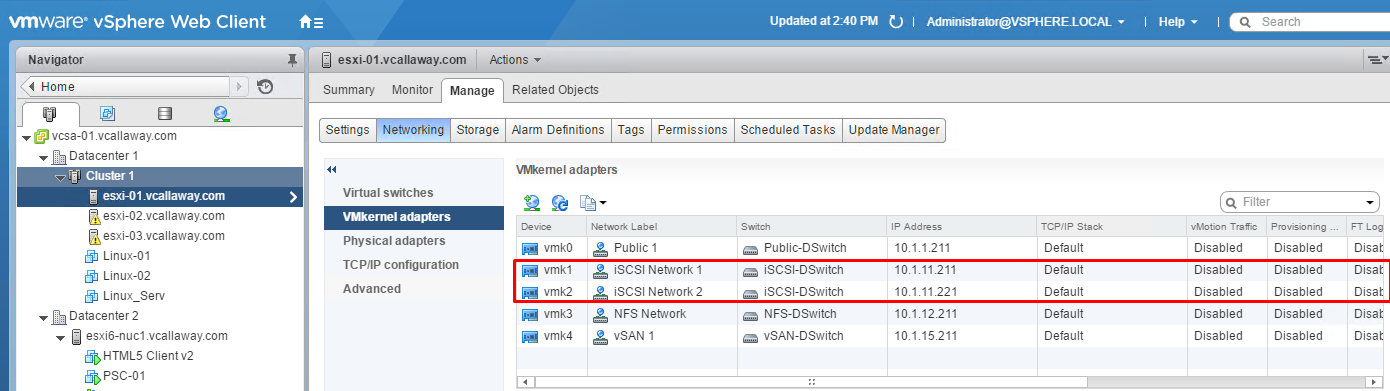

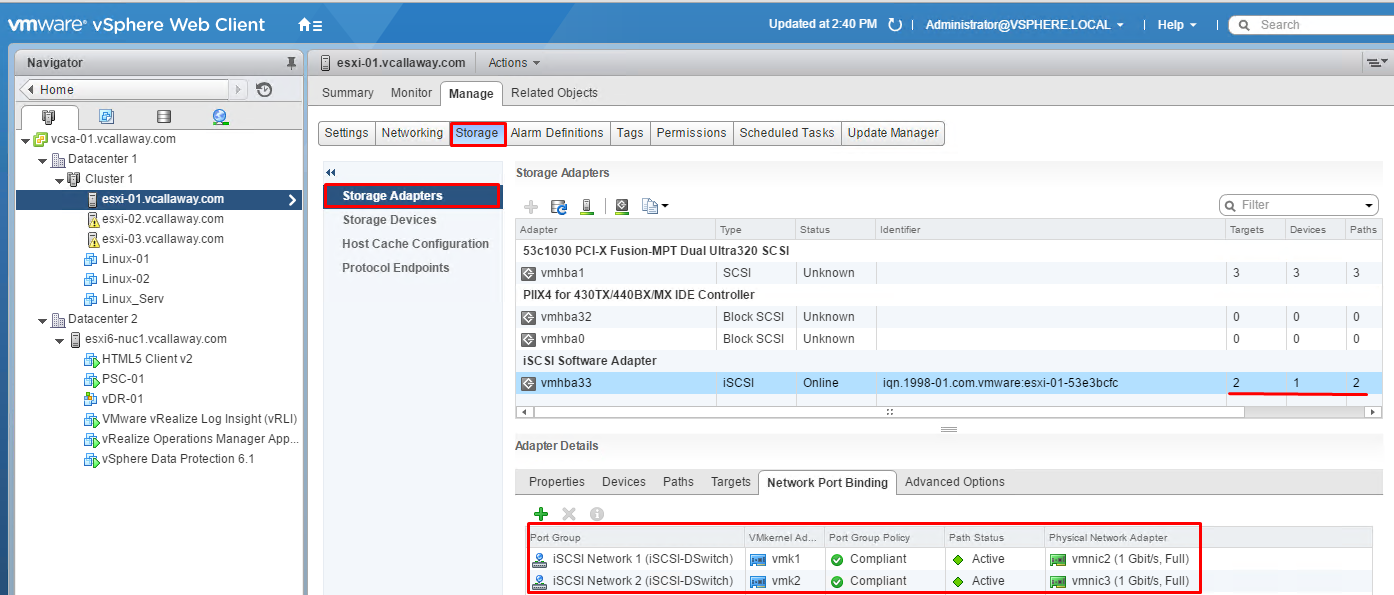

I have two VMkernel ports on my distributed switch.

They are added and compliant and both paths are active.
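The same binding can be done from the CLI with `esxcli iscsi networkportal`. Here is a minimal sketch that builds one `add` command per VMkernel port and a final `list` to confirm the bindings. The adapter name and vmk numbers in the usage line are assumptions, so substitute the ones from your own host.

```python
from typing import List


def bind_vmk_commands(adapter: str, vmk_nics: List[str]) -> List[List[str]]:
    """Build esxcli commands that bind VMkernel ports to an iSCSI adapter."""
    base = ["esxcli", "iscsi", "networkportal"]
    cmds = [
        base + ["add", f"--adapter={adapter}", f"--nic={nic}"]
        for nic in vmk_nics
    ]
    # Finish with a listing so the bound ports can be checked.
    cmds.append(base + ["list", f"--adapter={adapter}"])
    return cmds
```

For two ports, `bind_vmk_commands("vmhba33", ["vmk1", "vmk2"])` returns two `add` commands followed by the `list` command.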

Configure / Manage vSphere Flash Read Cache

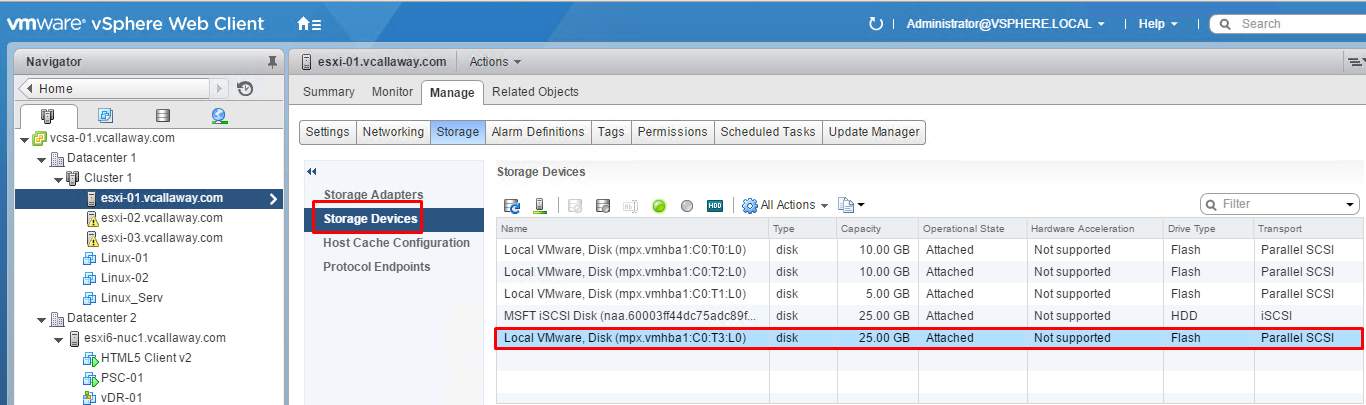

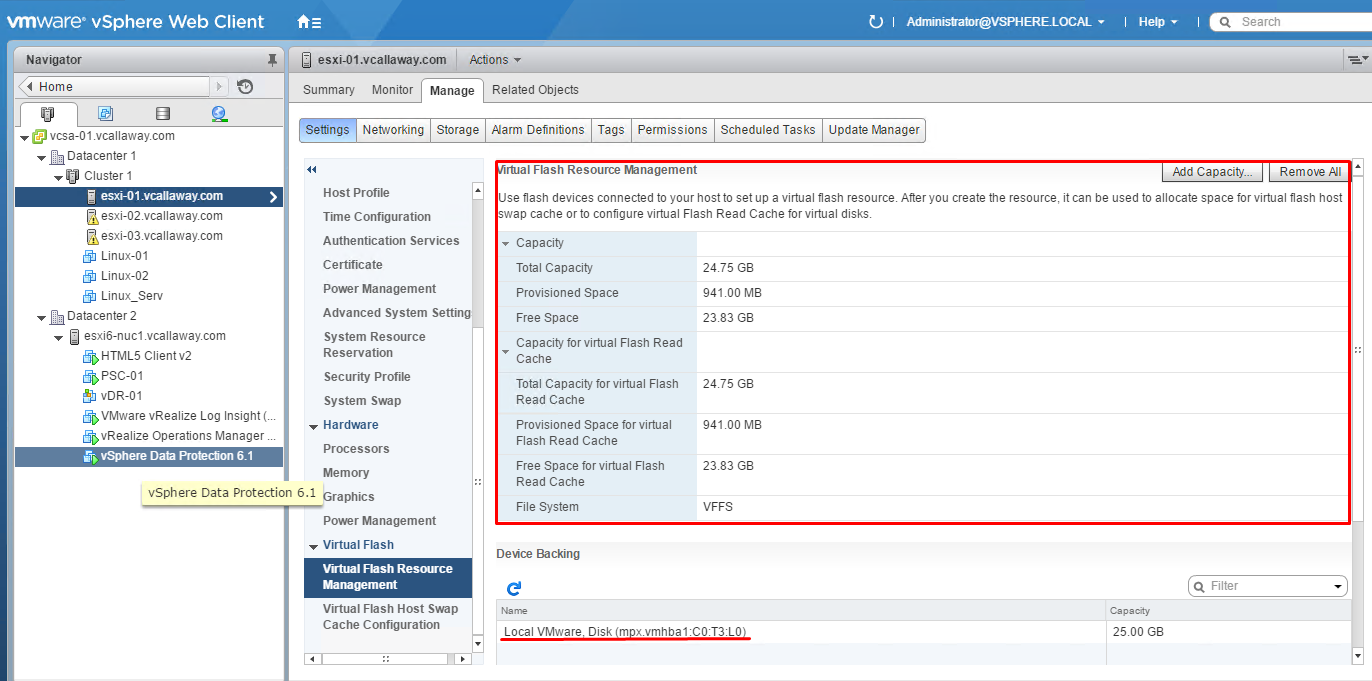

For my hosts, I’m still using the nested solution. I’ve added additional virtual disks to all 3 of the hosts and re-scanned so that the newly created disks show up. They are also all flash (SSD), so we will not have to mark them as flash, but you can do that if you are using magnetic disks for this study guide.
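If a disk is not detected as flash, one common CLI approach is to add a SATP rule with the `enable_ssd` option and then reclaim the device. The sketch below builds those commands. It assumes the disk is claimed by `VMW_SATP_LOCAL`, which is typical for local disks but should be checked on your host, and the device identifier in the usage line is a placeholder.

```python
from typing import List


def mark_as_ssd_commands(device: str,
                         satp: str = "VMW_SATP_LOCAL") -> List[List[str]]:
    """Build esxcli commands that tag a device as SSD and verify the result."""
    return [
        # Add a SATP rule that flags the device as SSD.
        ["esxcli", "storage", "nmp", "satp", "rule", "add",
         f"--satp={satp}", f"--device={device}", "--option=enable_ssd"],
        # Reclaim the device so the new rule takes effect.
        ["esxcli", "storage", "core", "claiming", "reclaim",
         f"--device={device}"],
        # Show the device details; look for the "Is SSD" field.
        ["esxcli", "storage", "core", "device", "list",
         f"--device={device}"],
    ]
```

Calling `mark_as_ssd_commands("mpx.vmhba1:C0:T1:L0")` returns the three commands in the order they would be run.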

I’ve added a 25GB disk to each of my hosts, as shown above.

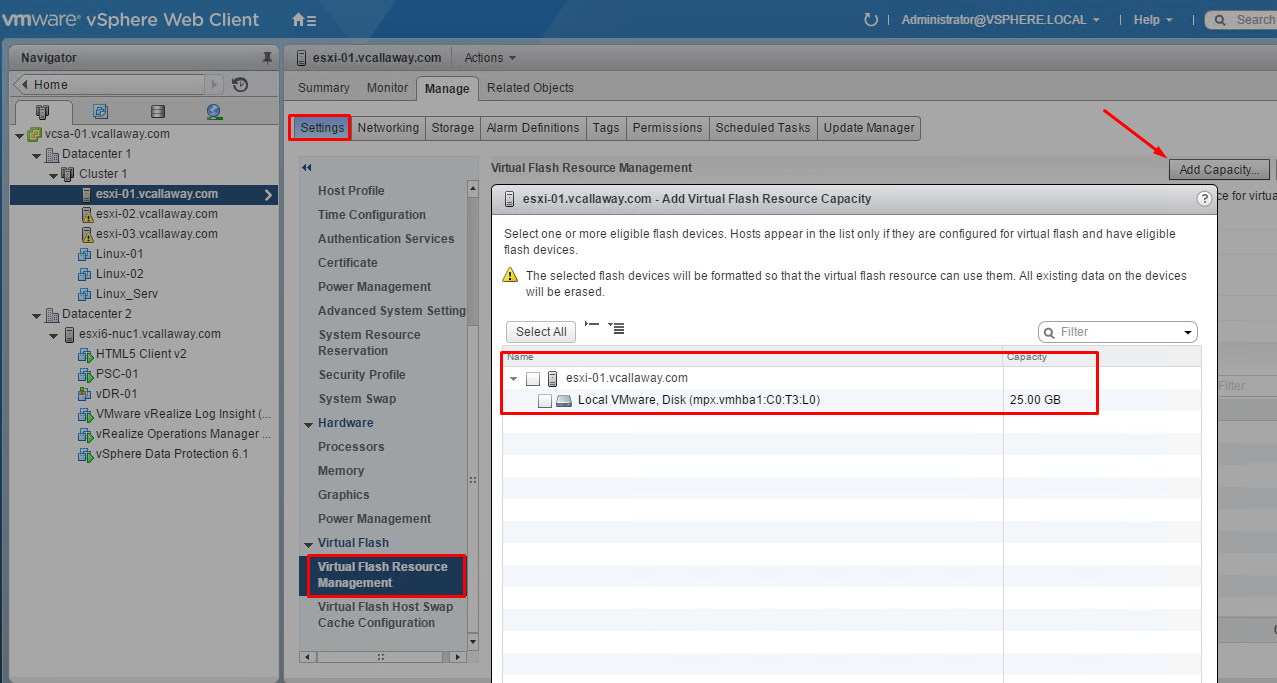

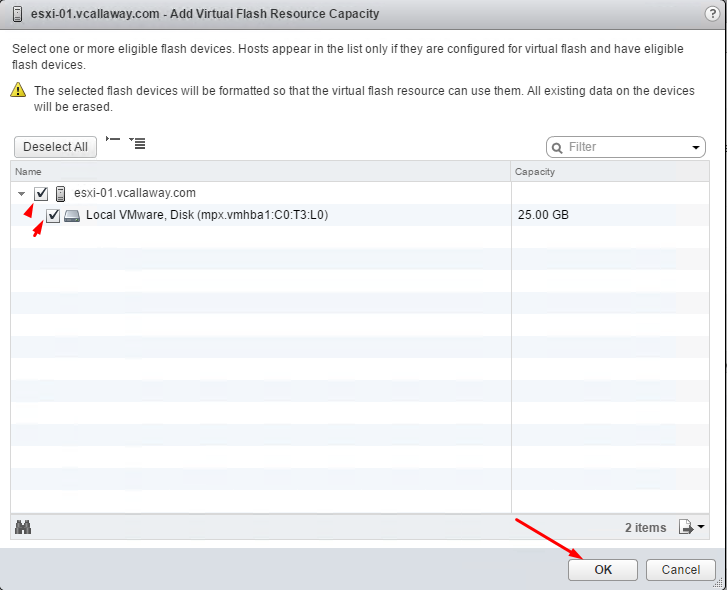

To add it as flash cache, we’ll need to do the following on each host.

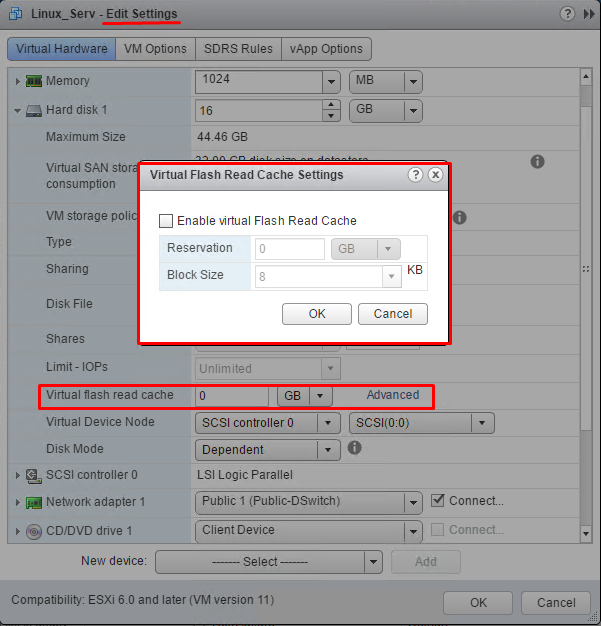

Now, the whole point of adding the flash cache to the host was to be able to assign it to VMs. Let’s do that.

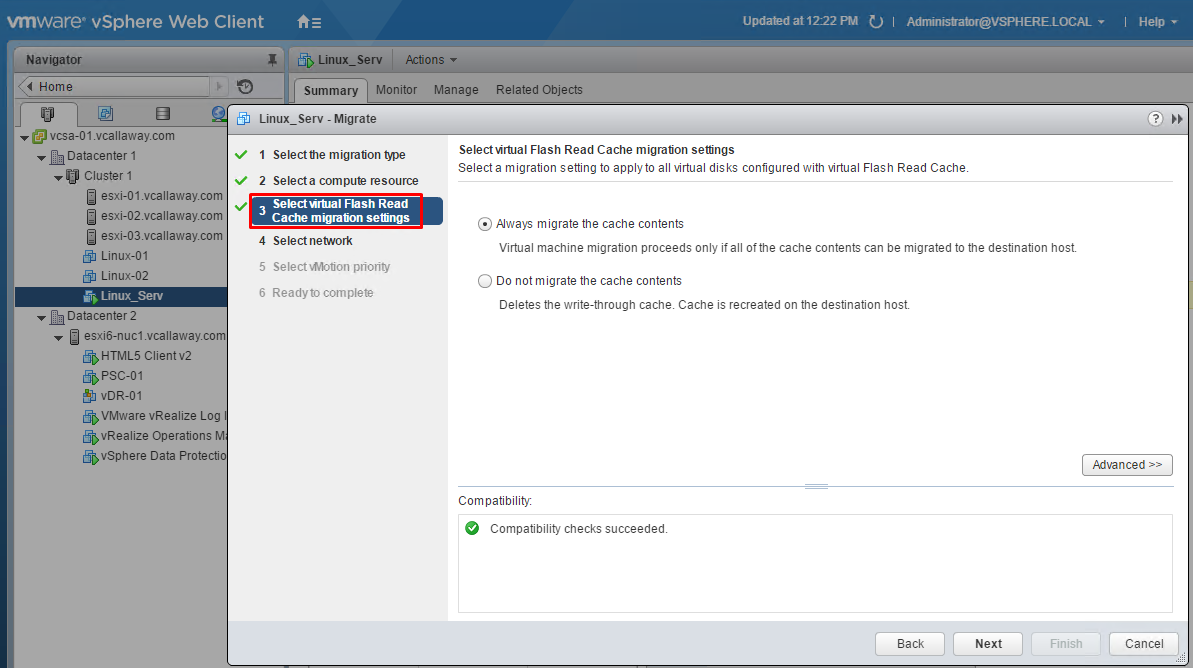

Now, if we try to migrate the virtual machine’s compute resources to another host, we get an extra option to migrate the flash cache contents as well.