Objective 3.3 Topics:

- Configure appropriate NIC teaming failover type and related physical network settings

- Determine and Apply Failover settings according to a Deployment Plan

- Configure and Manage Network I/O Control 3

- Determine and Configure vDS Port Binding Settings according to a Deployment Plan

Configure Appropriate NIC Teaming Failover type and related Physical Network Settings

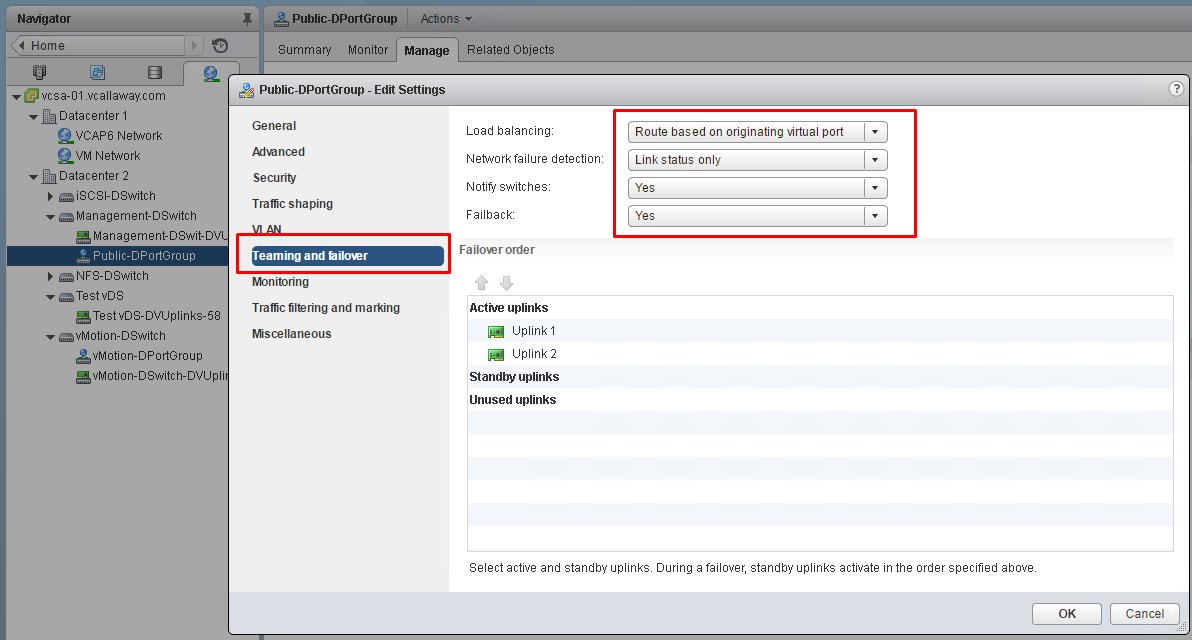

Teaming and Failover Policy

NIC teaming lets you increase the network capacity of a virtual switch by including two or more physical NICs in a team.

NIC Teaming Policy

You can use NIC teaming to connect a virtual switch to multiple physical NICs on a host, both to increase the network bandwidth of the switch and to provide redundancy.

Load Balancing Policy

The Load Balancing policy determines how network traffic is distributed between the network adapters in a NIC team. vSphere virtual switches load balance only the outgoing traffic. Incoming traffic is controlled by the load balancing policy on the physical switch.
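To show what "distributing outgoing traffic" means in practice, the sketch below compares two vSphere load balancing options: route based on originating virtual port and route based on IP hash. It is a simplified model, not VMware's implementation. The port-ID formula (port ID modulo uplink count) and the IP hash formula (XOR of the last IPv4 octets, modulo uplink count) are assumptions made for illustration, and the port IDs, IP addresses, and `vmnic` names are hypothetical. Note that IP hash also requires the physical switch ports to be configured as a static EtherChannel, which is one of the "related physical network settings" this objective refers to.

```python
from collections import Counter


def uplink_by_port_id(virtual_port_id: int, uplinks: list[str]) -> str:
    # Simplified "route based on originating virtual port": each virtual port
    # is pinned to one uplink, so a single vNIC never uses more than one NIC.
    return uplinks[virtual_port_id % len(uplinks)]


def uplink_by_ip_hash(src_ip: str, dst_ip: str, uplinks: list[str]) -> str:
    # Simplified "route based on IP hash" (assumed formula): XOR the last
    # octets of the source and destination IPv4 addresses, then take the
    # result modulo the number of uplinks.
    src_last = int(src_ip.split(".")[-1])
    dst_last = int(dst_ip.split(".")[-1])
    return uplinks[(src_last ^ dst_last) % len(uplinks)]


uplinks = ["vmnic0", "vmnic1"]

# Four VMs on virtual ports 10..13: each one is pinned to exactly one uplink.
by_port = Counter(uplink_by_port_id(port, uplinks) for port in range(10, 14))

# One VM (10.0.0.5) talking to four destinations: its flows spread out.
flows = [("10.0.0.5", f"10.0.1.{last}") for last in (20, 21, 22, 23)]
by_hash = Counter(uplink_by_ip_hash(src, dst, uplinks) for src, dst in flows)

print("per-port pinning:", dict(by_port))
print("IP hash, single VM:", dict(by_hash))
```

The difference to notice: with port-based routing a single VM is limited to one uplink's bandwidth, while IP hash lets one VM's traffic to different destinations use several uplinks. Either way, only outgoing traffic is balanced by the virtual switch.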

Network Failure Detection Policy

There are two failure detection methods:

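Link Status Only – Relies only on the link status that the network adapter reports. It detects failures such as removed cables and physical switch power failures, but not configuration errors such as a physical switch port blocked by spanning tree or assigned to the wrong VLAN, or a cable pulled on the far side of a physical switch.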

Beacon Probing – Sends out and listens for Ethernet broadcast frames (beacon probes) on all physical NICs in the team, and uses them together with link status to detect link failures. ESXi hosts send beacon packets every second. Beacon probing is most useful for failures that do not bring the link down. It works best with three or more NICs in a team, because ESXi can then isolate which single adapter has failed; with only two NICs, each one stops hearing the other's beacons and the host cannot tell which side is at fault.
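The "three or more NICs" advice comes down to simple reasoning, which the toy model below spells out. It assumes that a silently failed NIC neither sends nor receives beacons and that every healthy NIC hears every other healthy NIC. This is a simplification of how ESXi actually evaluates beacons, and the NIC names are hypothetical.

```python
def beacons_heard(nics: list[str], failed: set[str]) -> dict[str, set[str]]:
    """For each NIC, the set of teammates whose beacons it received.

    Toy assumption: a failed NIC (for example, one whose upstream path is
    broken while its link stays up) neither sends nor receives beacons,
    and every healthy NIC hears every other healthy NIC.
    """
    heard: dict[str, set[str]] = {}
    for receiver in nics:
        if receiver in failed:
            heard[receiver] = set()
        else:
            heard[receiver] = {s for s in nics if s != receiver and s not in failed}
    return heard


def suspected(nics: list[str], failed: set[str]) -> set[str]:
    """NICs whose beacons no teammate received."""
    heard = beacons_heard(nics, failed)
    return {n for n in nics if not any(n in heard[r] for r in nics if r != n)}


# Two NICs, vmnic0 silently fails: both NICs look equally guilty.
print(suspected(["vmnic0", "vmnic1"], {"vmnic0"}))
# Three NICs, vmnic0 silently fails: only vmnic0 is suspected.
print(suspected(["vmnic0", "vmnic1", "vmnic2"], {"vmnic0"}))
```

With two NICs the evidence is symmetric, so no single adapter can be blamed; a third NIC acts as a tie-breaker.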

Failback Policy

By default, failback is enabled on a NIC team. When a failed physical NIC comes back online, the virtual switch returns it to active use, replacing the standby NIC that took over its slot. If failback is disabled, the recovered NIC stays inactive until another currently active NIC fails.
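Here is a small sketch of the failback decision. It assumes a simple explicit failover order with one active uplink and the rest standby; real teams can have several active uplinks, and the `vmnic` names are hypothetical.

```python
from typing import Optional


def pick_active(order: list[str], failed: set[str],
                current: Optional[str], failback: bool) -> Optional[str]:
    """Choose the single active uplink from an explicit failover order.

    Toy assumption: one active uplink, the rest standby, listed by priority.
    """
    healthy = [nic for nic in order if nic not in failed]
    if not healthy:
        return None
    if failback or current is None or current in failed:
        # Failback on (or the current uplink died): use the top-priority healthy NIC.
        return healthy[0]
    # Failback off: keep the uplink that took over, even though a higher one recovered.
    return current


order = ["vmnic0", "vmnic1"]  # vmnic0 active, vmnic1 standby

for failback in (True, False):
    active = pick_active(order, set(), None, failback)          # vmnic0
    active = pick_active(order, {"vmnic0"}, active, failback)   # vmnic1 takes over
    active = pick_active(order, set(), active, failback)        # vmnic0 has recovered
    print(f"failback={failback}: active after recovery is {active}")
```

With failback enabled, vmnic0 is active again after it recovers; with failback disabled, vmnic1 keeps carrying the traffic.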

Notify Switches Policy

By using the notify switches policy, you can determine how the ESXi host communicates failover events. When a physical NIC connects to the virtual switch or when traffic is rerouted to a different physical NIC in the team, the virtual switch sends notifications over the network to update the lookup tables on physical switches.
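Physical switches learn which port a MAC address lives behind from the source address of incoming frames. The toy model below shows why the notification matters after a failover: without it, the switch keeps forwarding the VM's traffic to the old port until the VM happens to transmit. ESXi sends RARP frames for this purpose; the switch, port, and MAC names here are hypothetical.

```python
from typing import Optional


class PhysicalSwitch:
    """Toy MAC-learning switch: maps each source MAC to the port it last arrived on."""

    def __init__(self) -> None:
        self.mac_table: dict[str, str] = {}

    def receive(self, src_mac: str, ingress_port: str) -> None:
        self.mac_table[src_mac] = ingress_port

    def port_for(self, dst_mac: str) -> Optional[str]:
        return self.mac_table.get(dst_mac)


def fail_over(switch: PhysicalSwitch, vm_mac: str, new_port: str, notify: bool) -> None:
    """The host moves the VM's traffic to a standby uplink cabled to new_port."""
    if notify:
        # Notify switches: the host sends a frame sourced from the VM's MAC
        # out of the new uplink (ESXi uses RARP), so the switch relearns it.
        switch.receive(vm_mac, new_port)


VM_MAC = "00:50:56:00:00:01"  # hypothetical VM MAC address

for notify in (True, False):
    switch = PhysicalSwitch()
    switch.receive(VM_MAC, "port1")              # VM traffic first arrives via vmnic0 -> port1
    fail_over(switch, VM_MAC, "port2", notify)   # vmnic0 fails; vmnic1 -> port2 takes over
    print(f"notify={notify}: switch forwards VM traffic to {switch.port_for(VM_MAC)}")
```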

Configure and Manage Network I/O Control 3

What is Network I/O Control?

Network I/O Control version 3 introduces a mechanism to reserve bandwidth for system traffic based on the capacity of the physical adapters on a host. It enables fine-grained resource control at the VM network adapter level similar to the model that you use for allocating CPU and memory resources.

What can we do with Network I/O Control?

- Apply bandwidth reservations (for system traffic types, network resource pools, and individual VM network adapters)

- Guarantee bandwidth to Virtual Machines

Notes:

SR-IOV is not available for VMs configured to use Network I/O Control 3.

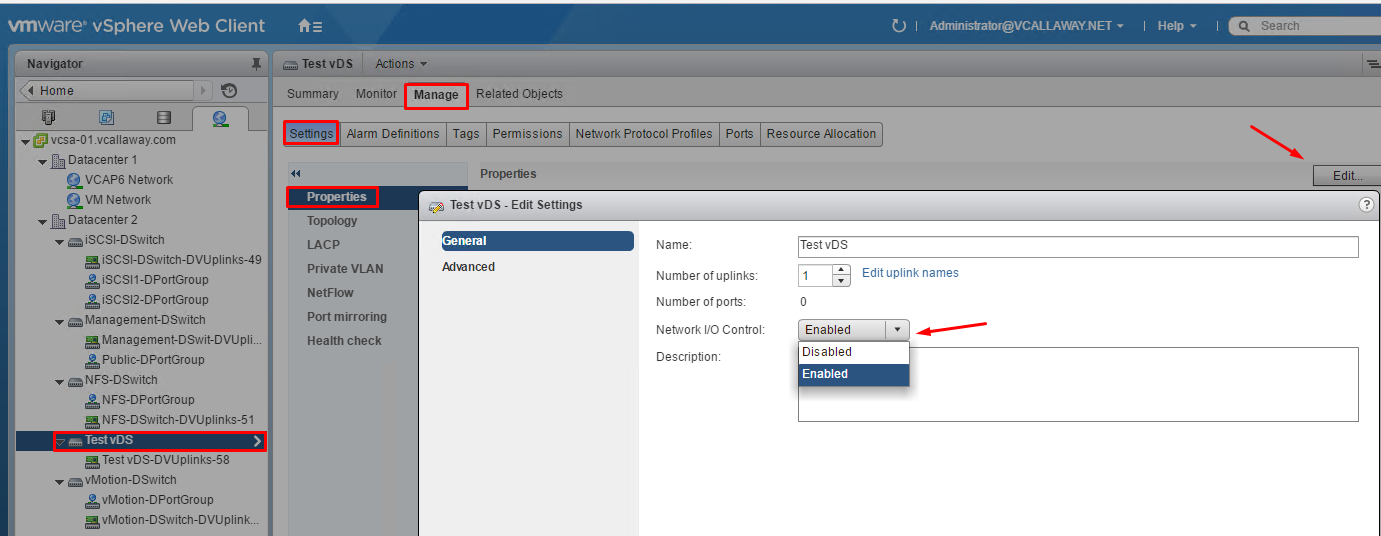

Enable NIOC on a Distributed Switch

Enable NIOC resource management to guarantee minimum bandwidth to system traffic for vSphere features and to virtual machines.

Enable NIOC

System Traffic Types:

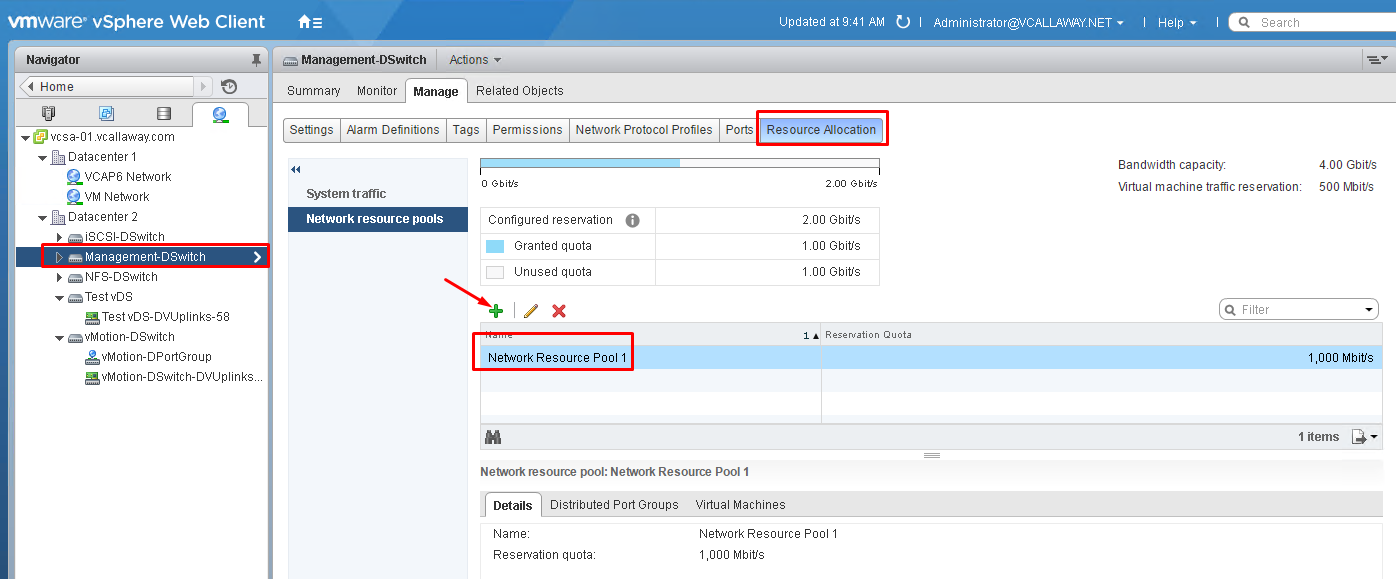

We can allocate bandwidth by applying shares, reservations and limits to the following types of system traffic:

- Management

- Fault Tolerance

- iSCSI

- NFS

- vSAN

- vMotion

- vSphere Replication

- vSphere Data Protection Backups

- Virtual Machines
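Shares only matter when a physical adapter is saturated, and they divide its capacity among the traffic types that are actively sending on that adapter. The sketch below covers only that proportional split. It assumes the Low/Normal/High share values of 25/50/100 and deliberately ignores reservations and limits, which NIOC enforces in addition to shares. The traffic mix and the 10 Gbps link are hypothetical.

```python
# Share levels as exposed in the NIOC UI; "Custom" allows a value from 1 to 100.
SHARE_LEVELS = {"low": 25, "normal": 50, "high": 100}


def allocate_by_shares(link_gbps: float, active_shares: dict[str, int]) -> dict[str, float]:
    """Split a saturated link among the traffic types actively sending on it.

    Simplification: ignores reservations and limits.
    """
    total = sum(active_shares.values())
    return {traffic: link_gbps * shares / total for traffic, shares in active_shares.items()}


# Hypothetical contention on a 10 Gbps uplink: only these three types are sending.
active = {
    "vMotion": SHARE_LEVELS["normal"],
    "Virtual Machines": SHARE_LEVELS["high"],
    "Management": SHARE_LEVELS["normal"],
}
print(allocate_by_shares(10, active))
```

Here the 200 active shares split the link into 2.5, 5.0, and 2.5 Gbps. If vMotion stopped sending, its portion would be divided between the two remaining types.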

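Note: The total bandwidth reserved across all system traffic types cannot exceed 75% of the bandwidth of the physical adapter with the lowest capacity. This prevents over-subscription and protects against configuration mistakes that would create bottlenecks.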

Example: with a 10 Gbps physical link, at most 7.5 Gbps can be reserved.
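The 75% rule is straightforward to check before you apply a reservation plan. The sketch below computes the ceiling against the slowest adapter in the team, which is how VMware's documentation phrases the limit. The function names and the example plan are hypothetical.

```python
MAX_RESERVED_FRACTION = 0.75  # NIOC v3 ceiling on total reservations


def reservation_ceiling_gbps(uplink_speeds_gbps: list[float]) -> float:
    # The ceiling is taken against the slowest physical adapter in the team.
    return MAX_RESERVED_FRACTION * min(uplink_speeds_gbps)


def fits(uplink_speeds_gbps: list[float], reservations_gbps: dict[str, float]) -> bool:
    return sum(reservations_gbps.values()) <= reservation_ceiling_gbps(uplink_speeds_gbps)


uplinks = [10, 10]  # two 10 Gbps adapters
plan = {"vMotion": 2.0, "vSAN": 4.0, "Virtual Machines": 1.5}
print(reservation_ceiling_gbps(uplinks), fits(uplinks, plan))   # 7.5 True
plan["Management"] = 0.5
print(fits(uplinks, plan))                                      # False: 8.0 > 7.5
```

A team that mixes a 10 Gbps and a 1 Gbps adapter would have a ceiling of only 0.75 Gbps under this rule, which is one reason to keep uplink speeds uniform.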

Add Network Resource Pool

Determine and Configure vDS Port Binding Settings According to a Deployment Plan

There are three types of port binding:

Static Binding – When a VM connects to a port group with static binding, a port is immediately assigned to and reserved for the VM, guaranteeing connectivity at all times. The port is released only when the VM is removed from the port group. Static binding is the default and is recommended by VMware for general use.

Management Point: vCenter Only

Dynamic Binding – When a VM connects to a port group with dynamic binding, a port is assigned only when the VM is powered on and its NIC is connected. The port is released when the VM is powered off or the NIC is disconnected. This type of binding can be used when there are more VMs than available ports, provided they are not all powered on at the same time. Dynamic binding has been deprecated since ESXi 5.0 but is still an option in the vSphere client.

Management Point: vCenter Only

Ephemeral Binding – When a VM connects to an ephemeral port group, the host creates a port and assigns it to the VM when the VM is powered on and its NIC is connected. When the VM is powered off or the NIC is disconnected, the port is deleted.

Management Point: vCenter and the ESXi host. However, network settings can be changed directly on the host only when vCenter is down.

Ephemeral binding is used for recovery purposes, when you need to provision ports directly on the host and bypass vCenter. It is also used when migrating from a Windows-based vCenter Server 5.x to the vCenter Server Appliance 6.x.

Note: Performance is lower than with static binding, because ports must be added and removed. Port-level permissions and controls are lost after every power cycle.
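To compare the three binding types side by side, the toy model below tracks when each one assigns and frees ports for three VMs sharing a two-port group, with no more than two running at a time. Its assumptions: static and dynamic groups have a fixed pool of `num_ports` ports (it ignores the elastic port allocation that static port groups can use to grow automatically), ephemeral groups create ports on demand, and "powered on" also means the NIC is connected. The class and method names are hypothetical and are not part of any vSphere API.

```python
class PortGroup:
    """Toy model of when a distributed port group assigns and frees ports."""

    def __init__(self, binding: str, num_ports: int = 0) -> None:
        if binding not in ("static", "dynamic", "ephemeral"):
            raise ValueError(f"unknown binding: {binding}")
        self.binding = binding
        self.num_ports = num_ports           # fixed pool; ignored for ephemeral
        self.assigned: dict[str, int] = {}   # VM name -> port ID
        self._next_ephemeral_id = 0

    def _assign(self, vm: str) -> None:
        if vm in self.assigned:
            return
        if self.binding == "ephemeral":
            port = self._next_ephemeral_id   # host creates a brand-new port
            self._next_ephemeral_id += 1
        else:
            in_use = set(self.assigned.values())
            free = [p for p in range(self.num_ports) if p not in in_use]
            if not free:
                raise RuntimeError(f"no free port for {vm}")
            port = free[0]
        self.assigned[vm] = port

    def connect(self, vm: str) -> None:
        """The VM's network adapter is attached to the port group."""
        if self.binding == "static":
            self._assign(vm)  # reserved immediately, whether powered on or not

    def power_on(self, vm: str) -> None:
        if self.binding != "static":
            self._assign(vm)

    def power_off(self, vm: str) -> None:
        if self.binding != "static":
            self.assigned.pop(vm, None)  # port released (deleted if ephemeral)

    def remove(self, vm: str) -> None:
        self.assigned.pop(vm, None)


# Three VMs share a two-port group; no more than two run at any one time.
for binding in ("static", "dynamic", "ephemeral"):
    pg = PortGroup(binding, num_ports=2)
    try:
        for vm in ("vm1", "vm2", "vm3"):
            pg.connect(vm)
        pg.power_on("vm1")
        pg.power_on("vm2")
        pg.power_off("vm1")
        pg.power_on("vm3")
        print(f"{binding}: ports in use {pg.assigned}")
    except RuntimeError as err:
        print(f"{binding}: {err}")
```

Static binding runs out of ports at connect time because every connected VM holds a port even while powered off. Dynamic binding reuses the freed port. Ephemeral binding creates a new port for each power-on, which illustrates why port-level settings do not survive a power cycle.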