Objective 4.2 Topics:

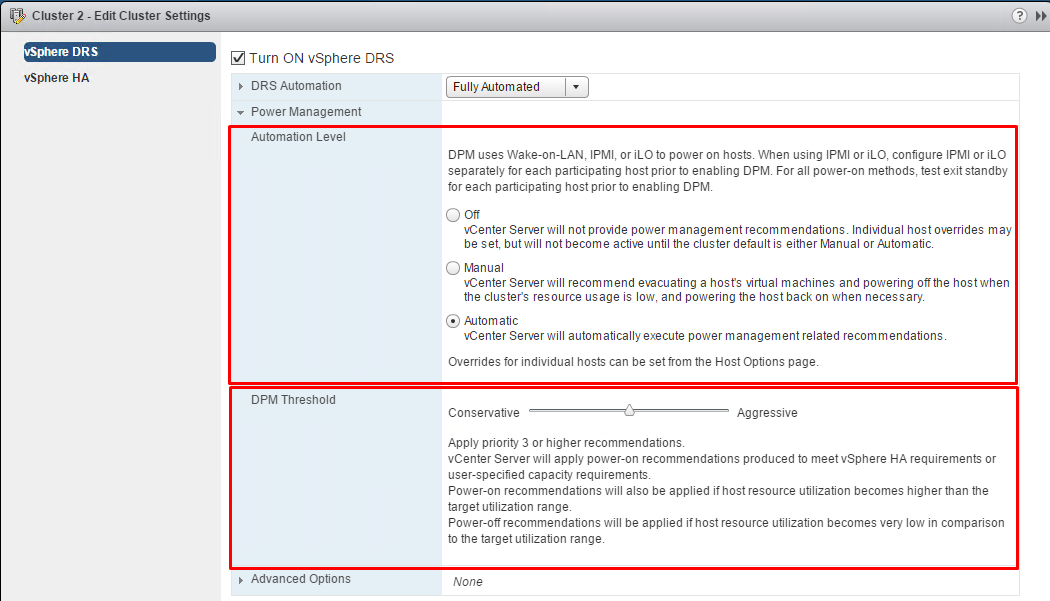

- Configure DPM, including appropriate DPM Thresholds

- Configure/Modify EVC mode on an existing Cluster

- Create DRS and DPM Alarms

- Configure applicable Power Management Settings for ESXi Hosts

- Configure DRS Cluster for Efficient/Optimal Load Distribution

- Properly Apply Virtual Machine Automation Levels based on Application Requirements

- Administer DRS / Storage DRS

- Create DRS / Storage DRS Affinity and Anti-Affinity Rules

- Configure Advanced DRS / Storage DRS Settings

- Create and Manage vMotion / Storage vMotion

- Create and Manage Advanced Resource Pool Configurations

Configure DPM, including Appropriate DPM Thresholds

The vSphere Distributed Power Management (DPM) feature allows a DRS cluster to reduce its power consumption by powering hosts on and off based on cluster resource utilization.

vSphere DPM monitors the cumulative demand of all virtual machines in the cluster for memory and CPU resources and compares this to the total available resource capacity of all hosts in the cluster. If sufficient excess capacity is found, vSphere DPM places one or more hosts in standby mode and powers them off after migrating their virtual machines to other hosts.
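The consolidation check described above can be sketched as a small Python model. This is a simplified illustration, not DPM's actual algorithm. It assumes a single hypothetical `TARGET_CEILING` on utilization of the hosts that stay powered on, and it compares total VM CPU and memory demand against the remaining capacity, greedily picking hosts that could be evacuated and placed in standby.

```python
from dataclasses import dataclass

# HYPOTHETICAL ceiling: the highest utilization we accept on the hosts that
# stay powered on. Illustrative only; this is not a real DPM setting.
TARGET_CEILING = 0.80


@dataclass
class Host:
    name: str
    cpu_mhz: float  # CPU capacity of the host
    mem_mb: float   # memory capacity of the host


def standby_candidates(hosts, cpu_demand_mhz, mem_demand_mb, ceiling=TARGET_CEILING):
    """Greedily pick hosts to place in standby while the remaining hosts can
    still absorb the total VM CPU and memory demand below the ceiling."""
    active = sorted(hosts, key=lambda h: h.cpu_mhz)  # try the smallest hosts first
    chosen = []
    for host in list(active):
        remaining = [h for h in active if h is not host]
        if not remaining:
            break  # never power off the last host
        cpu_cap = sum(h.cpu_mhz for h in remaining)
        mem_cap = sum(h.mem_mb for h in remaining)
        if cpu_demand_mhz / cpu_cap <= ceiling and mem_demand_mb / mem_cap <= ceiling:
            active = remaining  # this host's VMs would be migrated away first
            chosen.append(host.name)
    return chosen


if __name__ == "__main__":
    lab = [Host("esx01", 10000, 65536), Host("esx02", 10000, 65536), Host("esx03", 10000, 65536)]
    print(standby_candidates(lab, 12000, 40000))
```

With three equal hosts and light demand, one host can be freed. Heavier demand would keep all three powered on.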

vSphere DPM can use one of three power management protocols to bring a host out of standby mode: Intelligent Platform Management Interface (IPMI), Hewlett-Packard Integrated Lights-Out (iLO), or Wake-On-LAN (WOL).
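Of the three protocols, Wake-On-LAN is the simplest to illustrate. A WOL "magic packet" is 6 bytes of `0xFF` followed by the target NIC's MAC address repeated 16 times. vCenter Server sends the wake request itself when DPM brings a host out of standby. This sketch only shows the packet format a WOL-capable NIC listens for. The MAC address, broadcast address and UDP port 9 below are illustrative assumptions.

```python
import socket


def magic_packet(mac: str) -> bytes:
    """Build a Wake-On-LAN magic packet: 6 x 0xFF, then the MAC 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"invalid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_wol(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP (port 9 is a common convention)."""
    packet = magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))
```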

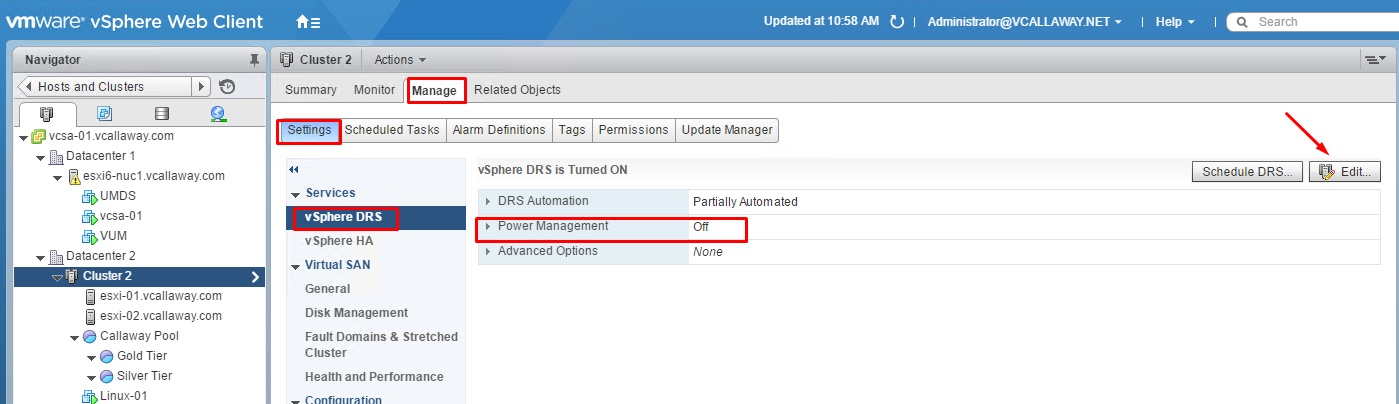

Enabling vSphere DPM for a DRS Cluster

For DPM to work, each host must support one of the three wake methods (Wake-On-LAN, IPMI, or iLO).

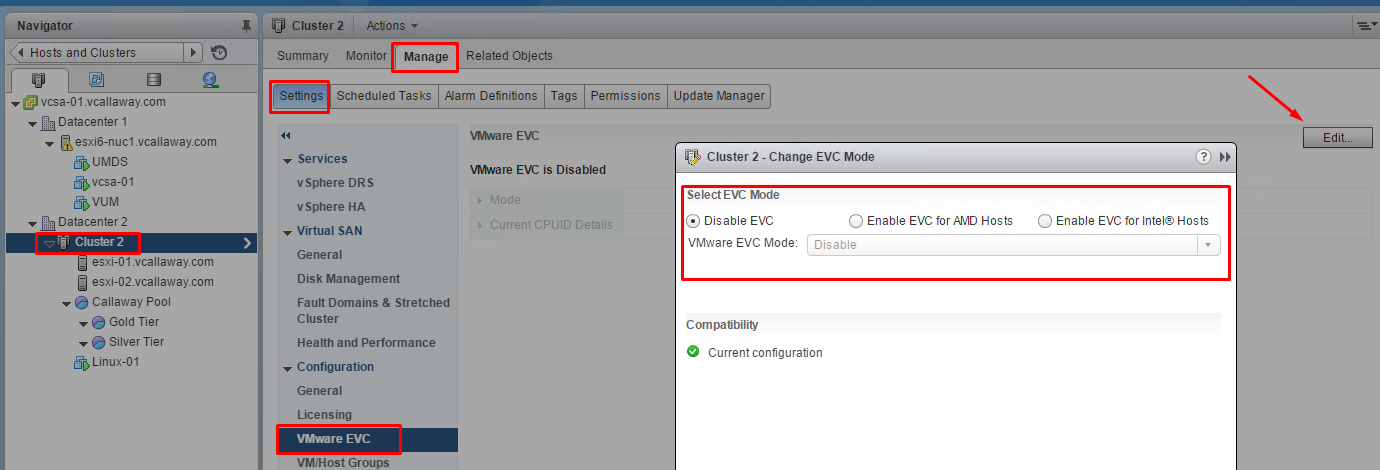

Configure/Modify EVC Mode on an Existing DRS Cluster

Configure EVC to ensure that virtual machine migrations between hosts in the cluster do not fail because of CPU feature incompatibilities.

How EVC works

- If all hosts in a cluster are compatible with a newer EVC mode, the mode can be changed.

- EVC can be enabled on an existing cluster that does not yet have EVC enabled.

- The EVC mode can be raised to expose more CPU features.

- The EVC mode can be lowered to hide CPU features and increase compatibility.
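The rules above can be modeled with an ordered list of EVC baselines. A mode can be applied only if every host supports it, so the highest mode a cluster can use is the lowest of the hosts' maximum supported modes. The list below is an illustrative subset of Intel baselines, oldest first. The host names and per-host maximums are hypothetical inputs, not values read from vCenter.

```python
# Illustrative subset of Intel EVC baselines, oldest (fewest features) first.
INTEL_EVC_MODES = ["Merom", "Penryn", "Nehalem", "Westmere",
                   "Sandy Bridge", "Ivy Bridge", "Haswell", "Broadwell"]
RANK = {mode: i for i, mode in enumerate(INTEL_EVC_MODES)}


def highest_common_mode(host_max_modes):
    """Highest EVC mode every host supports: the lowest of the hosts' maximums."""
    return min(host_max_modes.values(), key=RANK.__getitem__)


def can_set_mode(target, host_max_modes):
    """A mode can be applied (raised or lowered to) only if every host supports it."""
    return all(RANK[target] <= RANK[m] for m in host_max_modes.values())


if __name__ == "__main__":
    cluster = {"esx01": "Haswell", "esx02": "Broadwell"}  # hypothetical hosts
    print(highest_common_mode(cluster))
```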

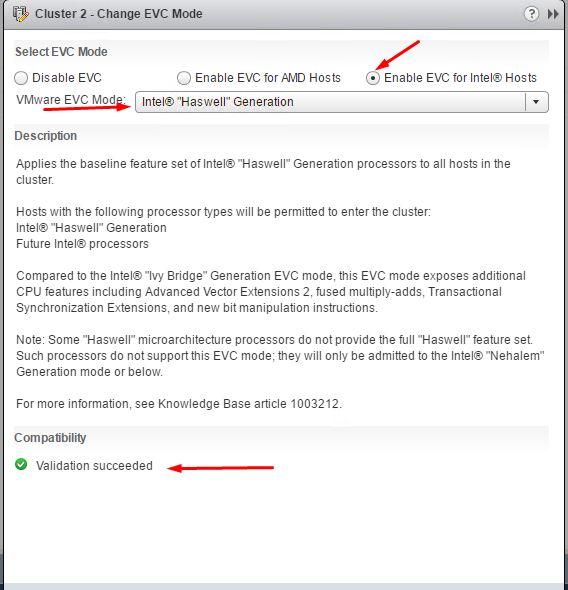

Virtual Machine Impact

Raising EVC Mode – When the EVC mode is raised, VMs can remain powered on. However, a running VM will not be able to take advantage of the new features until it is powered completely off and then powered back on.

Lowering EVC Mode – When the EVC mode is lowered, VMs must be powered off and then powered back on.
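The VM impact rules can be summarized as a small decision function. This is a sketch of the behavior described above. The mode list is the same illustrative subset of Intel baselines, and the returned strings are hypothetical labels, not vSphere messages.

```python
EVC_ORDER = ["Merom", "Penryn", "Nehalem", "Westmere",
             "Sandy Bridge", "Ivy Bridge", "Haswell", "Broadwell"]


def vm_action(current_mode, new_mode, vm_powered_on):
    """What a VM needs when the cluster EVC mode changes (simplified)."""
    old, new = EVC_ORDER.index(current_mode), EVC_ORDER.index(new_mode)
    if not vm_powered_on or new == old:
        return "no action needed"  # powered-off VMs pick up the mode at next power-on
    if new > old:
        # Raising: the VM keeps running with its old CPU feature set.
        return "power off and on to expose new CPU features"
    # Lowering: running VMs may be using features the new mode hides.
    return "power off before lowering, then power on"
```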

I’m enabling EVC mode and raising it, so compatibility is not an issue. However, I would still need to power off any VMs that are currently running, and power them back on, before they could take advantage of any new features. I selected ‘Haswell’ mode since my nested lab runs on Haswell processors.

Create DRS and DPM Alarms

The vSphere Web Client indicates whether a DRS cluster is valid, overcommitted (yellow), or invalid (red).

A DRS cluster can become overcommitted (yellow) or invalid (red) for the following reasons:

- Host failure

- VMs are powered on through a direct connection to a host, so vCenter is not aware of them

- Reservation constraints on a parent resource pool when a VM is trying to fail over.

- Changes are made to hosts or VMs while vCenter is unavailable
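VMware's resource management documentation describes the two states this way. A cluster is overcommitted (yellow) when its resource pool tree is internally consistent but the cluster lacks the capacity for all reservations. It is invalid (red) when the tree itself is inconsistent, for example when children reserve more than a parent's non-expandable reservation. The sketch below models that distinction for CPU reservations only. The `Pool` structure and the MHz figures are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    name: str
    reservation_mhz: float
    expandable: bool = True
    children: list = field(default_factory=list)             # child Pools
    vm_reservations_mhz: list = field(default_factory=list)  # VMs directly in this pool


def children_demand(pool):
    """Reservations requested by the pool's VMs and child pools."""
    return sum(pool.vm_reservations_mhz) + sum(used_reservation(c) for c in pool.children)


def used_reservation(pool):
    """Reservation a pool draws from its parent; an expandable pool can grow."""
    if pool.expandable:
        return max(pool.reservation_mhz, children_demand(pool))
    return pool.reservation_mhz


def is_consistent(pool):
    """Children must fit inside a non-expandable reservation, recursively."""
    if not pool.expandable and children_demand(pool) > pool.reservation_mhz:
        return False
    return all(is_consistent(c) for c in pool.children)


def cluster_status(pools, capacity_mhz):
    if not all(is_consistent(p) for p in pools):
        return "invalid"        # red: tree not internally consistent
    if sum(used_reservation(p) for p in pools) > capacity_mhz:
        return "overcommitted"  # yellow: consistent tree, not enough capacity
    return "valid"
```

For example, after a host failure shrinks cluster capacity, a tree that was valid can become overcommitted without any configuration change.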

Monitoring DPM

Use event-based alarms in vCenter Server to monitor vSphere DPM.

We can look for the following events.

- DrsEnteringStandbyModeEvent

- DrsEnteredStandbyModeEvent

- DrsExitingStandbyModeEvent

- DrsExitedStandbyModeEvent
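An event-based alarm watches the event stream for specific event types and fires when one appears. The sketch below mimics that filtering for the four DPM events listed above. The `(event_type, host_name)` tuple format and the message text are hypothetical. In practice the alarm is defined in vCenter Server, not in code.

```python
# The four DPM event types listed above, mapped to hypothetical alarm messages.
DPM_EVENTS = {
    "DrsEnteringStandbyModeEvent": "host is entering standby mode",
    "DrsEnteredStandbyModeEvent": "host entered standby mode",
    "DrsExitingStandbyModeEvent": "host is exiting standby mode",
    "DrsExitedStandbyModeEvent": "host exited standby mode",
}


def dpm_alarm_messages(events):
    """events: iterable of (event_type, host_name) tuples (hypothetical format).
    Returns one message per DPM event; all other events are ignored."""
    return [f"{host}: {DPM_EVENTS[etype]}" for etype, host in events if etype in DPM_EVENTS]
```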

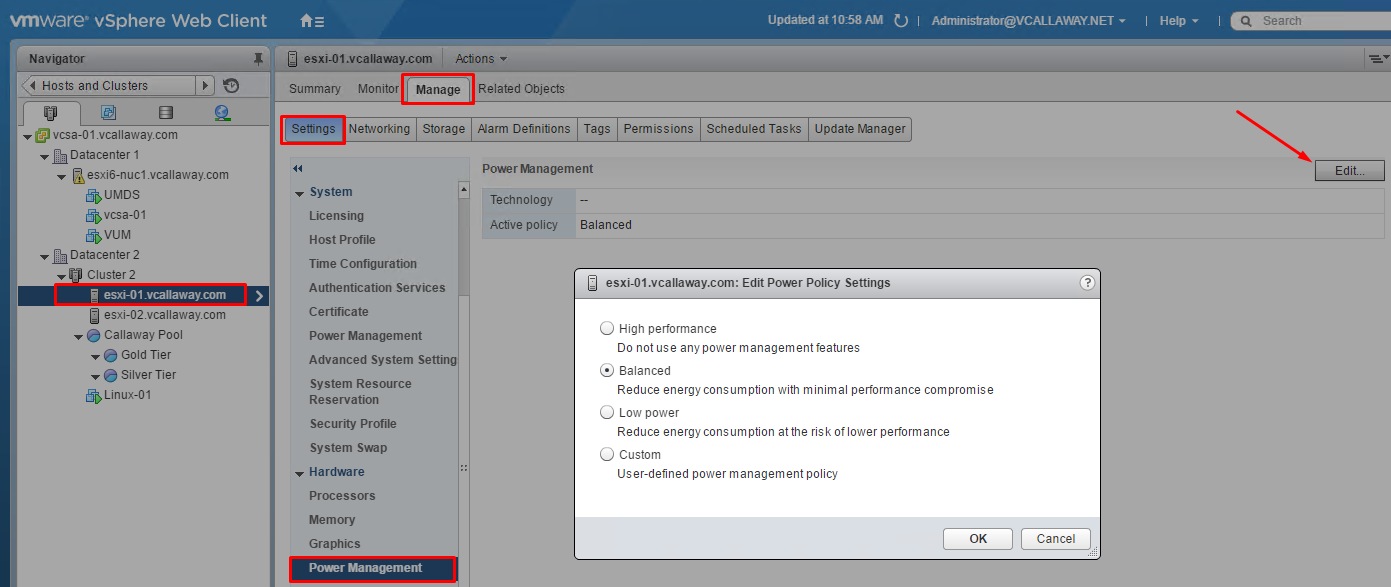

Configure Applicable Power Management Settings for ESXi Hosts

ESXi can take advantage of several power management features that the host hardware provides to adjust the trade-off between performance and power use. You can control how ESXi uses these features by selecting a power management policy.

CPU Power Management Policies

Not Supported – The host does not support power management features, or power management is not enabled in the BIOS. A good example of this is a nested ESXi host. 😉

High Performance – The VMkernel detects certain power management features but does not use them unless the BIOS requests them for power capping or thermal reasons.

Balanced (Default) – The VMkernel uses the available power management features conservatively to reduce energy consumption with minimal impact on performance.

Low Power – The VMkernel aggressively uses power management features to reduce power consumption, at the risk of lower performance.

Custom – The VMkernel bases its power policy on a custom set of advanced configuration parameters set by the administrator.
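The four selectable policies correspond to values of the `Power.CpuPolicy` advanced setting. The mapping below reflects our understanding of those string values and should be verified against your ESXi version's documentation before use. "Not Supported" is a host state rather than a selectable policy, so it has no entry.

```python
# ASSUMPTION: Power.CpuPolicy string values as we understand them; verify
# against the documentation for your ESXi version.
POLICY_TO_CPUPOLICY = {
    "High Performance": "static",
    "Balanced": "dynamic",  # the default policy
    "Low Power": "low",
    "Custom": "custom",
}
DEFAULT_POLICY = "Balanced"


def cpu_policy_value(policy=DEFAULT_POLICY):
    """Translate a policy name from the client UI to its advanced-setting value."""
    try:
        return POLICY_TO_CPUPOLICY[policy]
    except KeyError:
        raise ValueError(f"not a selectable power policy: {policy!r}") from None
```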

Change Power Management Policies

Configure DRS Cluster for Efficient/Optimal Load Distribution

DRS migration threshold allows you to specify which recommendations are generated and then applied (when the virtual machines involved in the recommendation are in fully automated mode) or shown (if in manual mode). This threshold is also a measure of how much cluster imbalance across host (CPU and memory) loads is acceptable.

There are five settings ranging from Conservative to Aggressive.

Each recommendation is assigned a priority level (1-5). Priority one, the highest, indicates a mandatory move caused by a host entering maintenance or standby mode, or by a DRS rule violation. The other priority ratings indicate how much the recommendation would improve the cluster’s performance, from priority two (significant improvement) to priority five (slight improvement).
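The threshold and the priorities work together as a filter. At threshold level N (1 = Conservative, 5 = Aggressive), recommendations with priority 1 through N are generated. Conservative therefore only acts on mandatory priority-one moves, and Aggressive acts on everything. The `(description, priority)` tuples below are hypothetical stand-ins for DRS recommendations.

```python
def applied_recommendations(recommendations, threshold):
    """Keep recommendations whose priority is within the migration threshold.

    threshold: 1 (Conservative) through 5 (Aggressive).
    recommendations: list of (description, priority) tuples (hypothetical format).
    """
    if not 1 <= threshold <= 5:
        raise ValueError("DRS migration threshold must be between 1 and 5")
    return [r for r in recommendations if r[1] <= threshold]


if __name__ == "__main__":
    recs = [("evacuate esx03 for maintenance", 1), ("move vm1 to esx02", 2), ("move vm2 to esx01", 4)]
    print(applied_recommendations(recs, 3))
```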

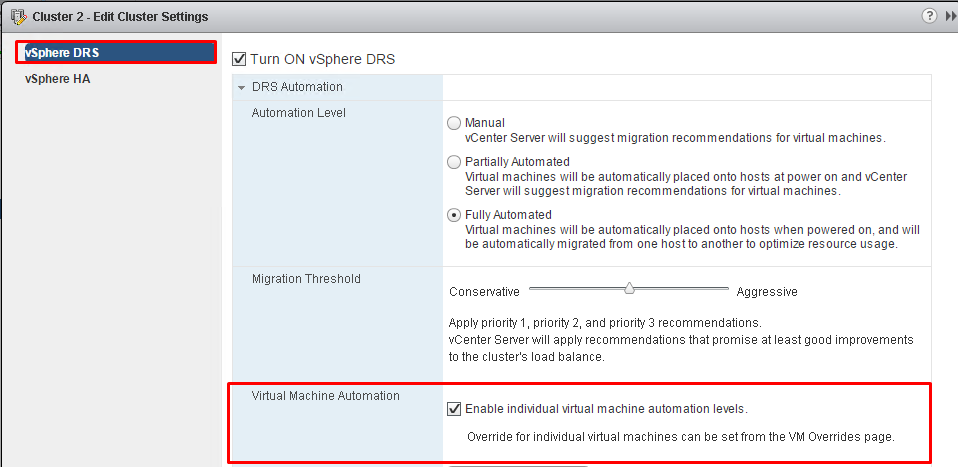

Properly Apply Virtual Machine Automation Levels Based on Application Requirements

Create DRS / Storage DRS Affinity and Anti-Affinity Rules

With DRS affinity rules we can control the placement of virtual machines on hosts within a cluster.

There are 2 types of rules:

VM-Host Affinity Rule – We can make a rule that states a VM must run on a particular host or group of hosts or we can set an anti-affinity rule that states a VM must NOT run on a host or group of hosts.

VM-VM Affinity Rule – We can make a rule that states VM1 and VM2 must run on the SAME host together. The opposite is a VM-VM anti-affinity rule, which states that VM1 and VM2 must run on separate hosts. Good candidates for anti-affinity rules are domain controllers and clustered SQL or web servers.
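Checking whether a placement violates these rules is straightforward to sketch. Only "must" style rules are modeled here. The placement map, rule tuples and VM/host names are hypothetical, and DRS evaluates rules internally rather than through code like this.

```python
def vm_vm_violations(placement, rules):
    """placement: VM -> host. rules: list of (kind, vms), kind in {'affinity', 'anti-affinity'}."""
    violations = []
    for kind, vms in rules:
        hosts = [placement[vm] for vm in vms]
        if kind == "affinity" and len(set(hosts)) > 1:
            violations.append((kind, vms))  # VMs are spread over several hosts
        elif kind == "anti-affinity" and len(set(hosts)) < len(hosts):
            violations.append((kind, vms))  # at least two VMs share a host
    return violations


def vm_host_violations(placement, rules):
    """rules: list of (kind, vm_group, host_group), kind in {'must', 'must-not'}."""
    violations = []
    for kind, vm_group, host_group in rules:
        for vm in vm_group:
            on_group = placement[vm] in host_group
            if (kind == "must" and not on_group) or (kind == "must-not" and on_group):
                violations.append((kind, vm))
    return violations
```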

To check whether any enabled affinity rules are being violated and cannot be corrected by DRS, select the cluster’s DRS tab and click Faults.

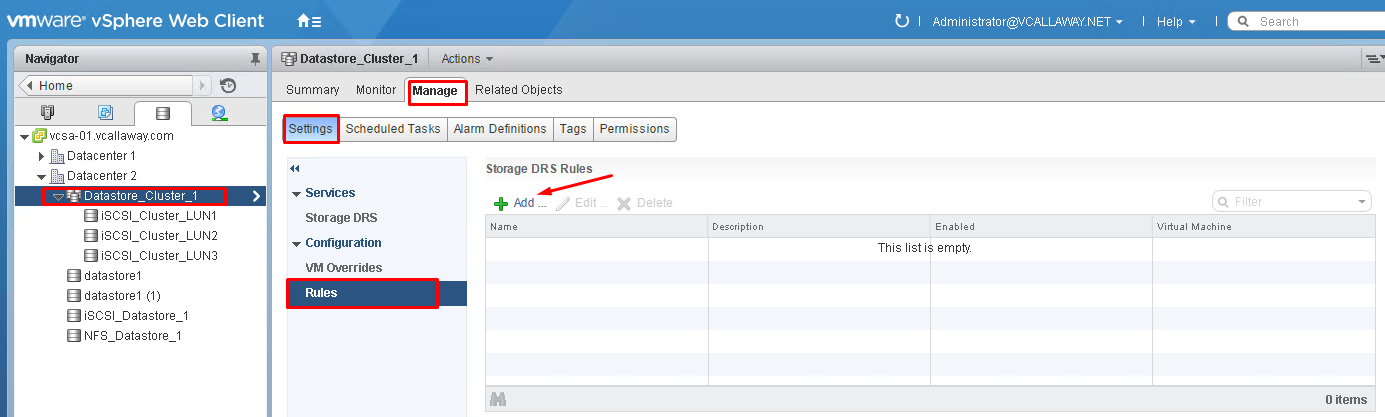

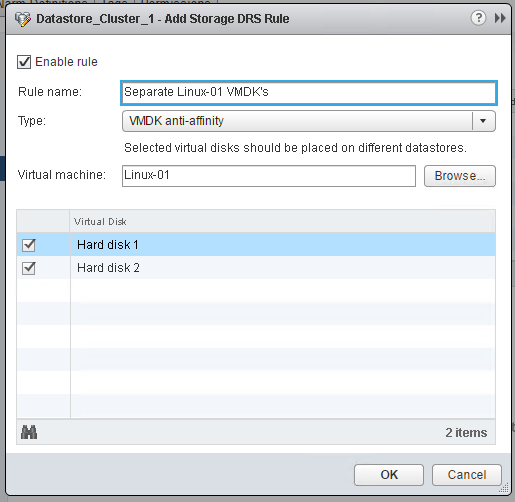

With Storage DRS Anti-Affinity rules we can control which virtual disks should not be placed on the same datastore within a datastore cluster. By default, a VM’s virtual disks are kept together on the same datastore.

Rules –

Inter-VM Anti-Affinity Rules – Specify which virtual machines should NEVER be kept on the same datastore. (VM1’s VMDKs vs. VM2’s VMDKs)

Intra-VM Anti-Affinity Rules – Specify which virtual disks associated with a particular virtual machine MUST be kept on different datastores. (VM1’s VMDK1 vs. VM1’s VMDK2)
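Both Storage DRS rule types can be expressed as checks over a map of each VM's disks to datastores. This is an illustrative model only. The `vmdk_locations` structure and the VM, disk and datastore names are hypothetical.

```python
def inter_vm_violations(vmdk_locations, vms):
    """Inter-VM rule: no two of the listed VMs may share a datastore.
    vmdk_locations: VM -> {disk_name: datastore}."""
    violations = []
    for i, a in enumerate(vms):
        for b in vms[i + 1:]:
            shared = set(vmdk_locations[a].values()) & set(vmdk_locations[b].values())
            if shared:
                violations.append((a, b, sorted(shared)))
    return violations


def intra_vm_violations(vmdk_locations, vm, disks):
    """Intra-VM rule: the listed disks of one VM must be on different datastores."""
    seen = {}  # datastore -> first listed disk found on it
    violations = []
    for disk in disks:
        ds = vmdk_locations[vm][disk]
        if ds in seen:
            violations.append((seen[ds], disk, ds))
        else:
            seen[ds] = disk
    return violations
```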

Creating Inter-VM Anti-Affinity Rules

Creating Intra-VM Anti-Affinity Rules

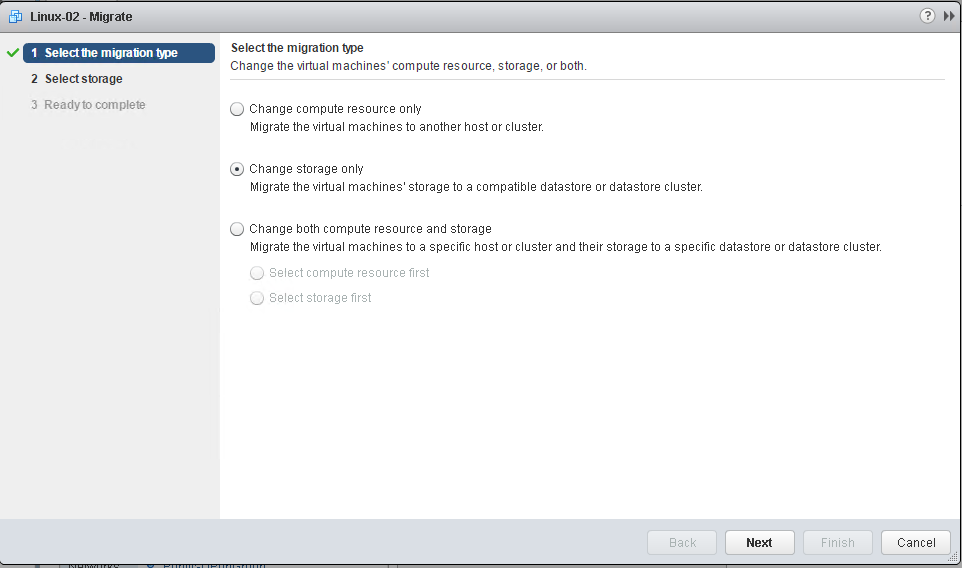

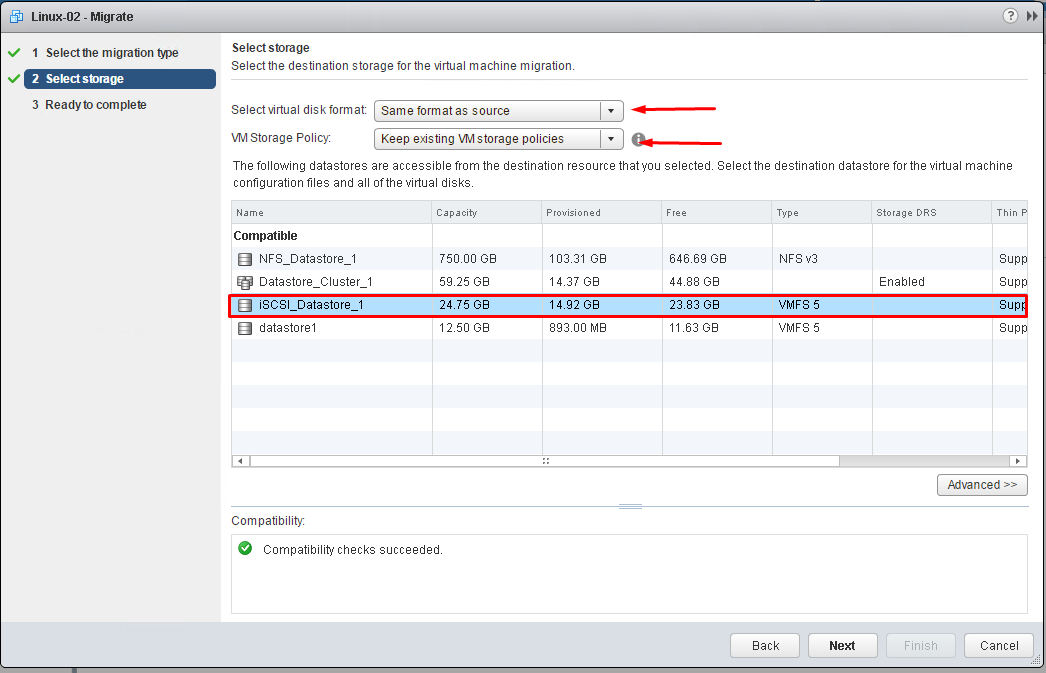

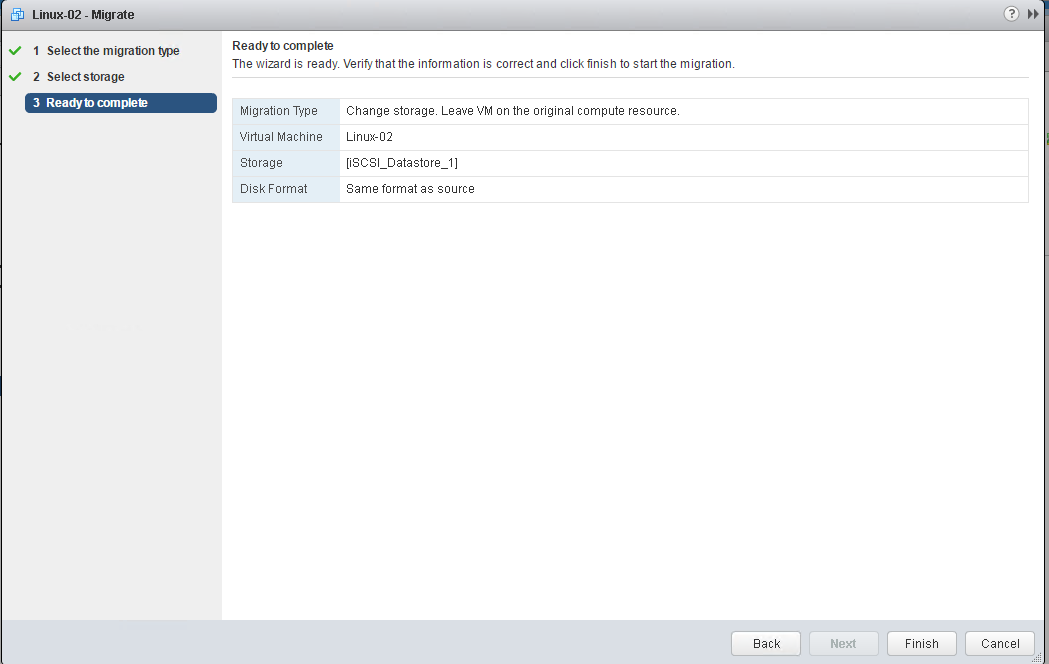

Configure and Manage vMotion / Storage vMotion

Set/Change the disk format if necessary and select the VM storage policy (if applicable).

Create and Manage Advanced Resource Pool Configurations

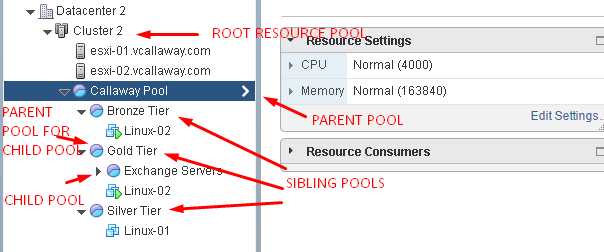

A resource pool is a logical abstraction for flexible management of resources. Resource pools can be grouped into hierarchies and used to hierarchically partition available CPU and memory resources.

A resource pool can contain child resource pools, virtual machines, or both. You can create a hierarchy of shared resources. The resource pools at a higher level are called parent resource pools. Resource pools and virtual machines that are at the same level are called siblings. The cluster itself represents the root resource pool. If you do not create child resource pools, only the root resource pool exists.
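How a hierarchy partitions resources can be illustrated with shares. Under contention, siblings split their parent's resources in proportion to their shares. The sketch makes several simplifying assumptions: reservations and limits are ignored, every child is assumed to be contending, and the pool names, share values and 12000 MHz capacity are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    shares: int
    children: list = field(default_factory=list)  # child pools or VMs


def entitlement(node, capacity, result=None):
    """Split each parent's capacity among its children in proportion to shares."""
    if result is None:
        result = {}
    result[node.name] = capacity
    total = sum(c.shares for c in node.children)
    for child in node.children:
        entitlement(child, capacity * child.shares / total, result)
    return result


if __name__ == "__main__":
    prod = Node("prod", 8000, [Node("vm1", 1000), Node("vm2", 1000)])
    test = Node("test", 4000)
    root = Node("cluster", 0, [prod, test])  # root pool: shares unused
    print(entitlement(root, 12000))
```

Because entitlement flows down the tree, `vm1` and `vm2` split only what `prod` receives from the root, not the whole cluster.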