Objective 2.3 Topics:

- Analyze and resolve storage multi-pathing and failover issues

- Troubleshoot storage device connectivity

- Analyze and resolve Virtual SAN configuration issues

- Troubleshoot iSCSI connectivity issues

- Analyze and resolve NFS issues

- Troubleshoot RDM issues

Analyze and resolve storage multi-pathing and failover issues

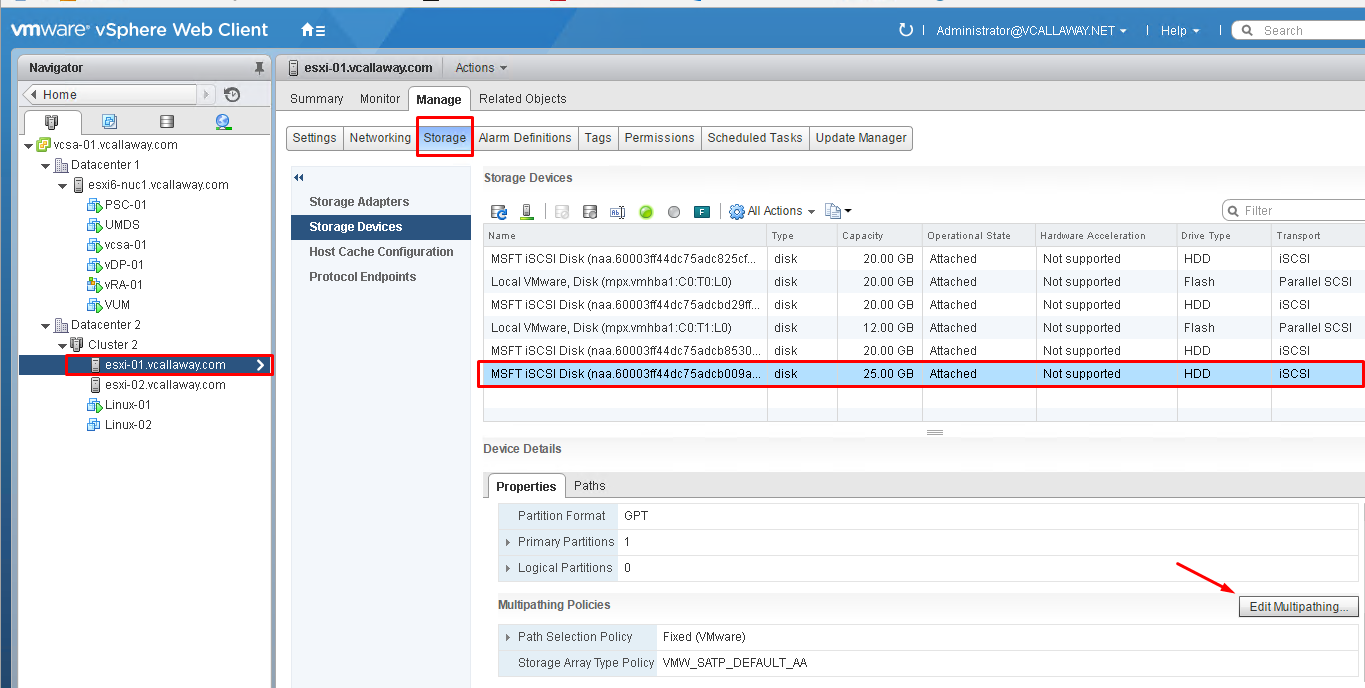

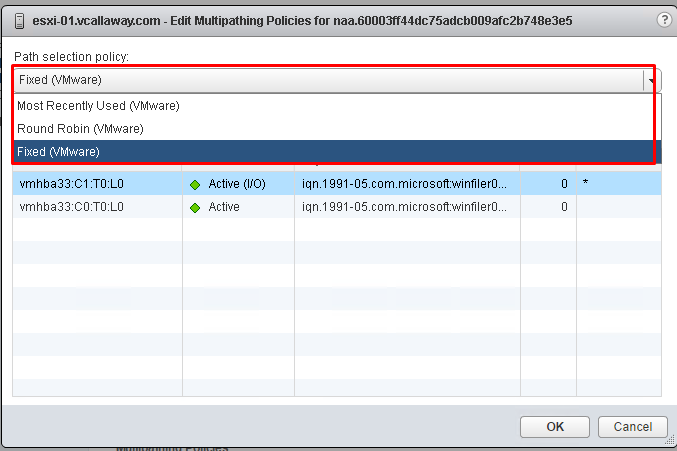

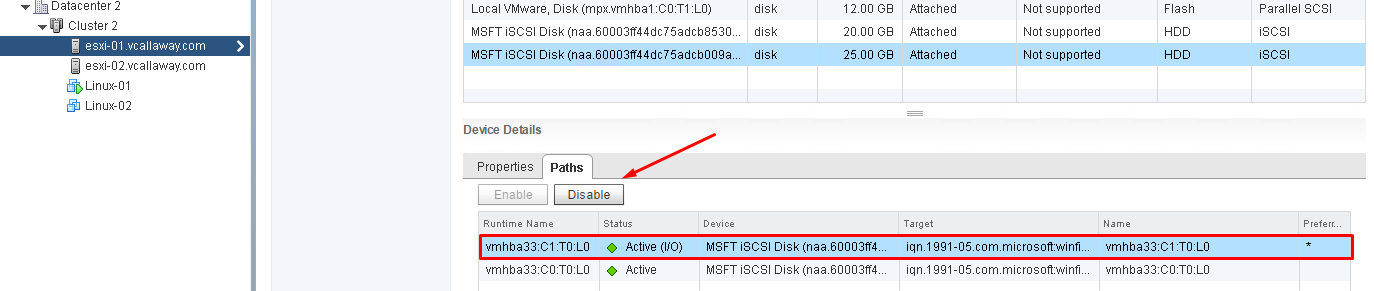

There are two methods we can use to change the multipathing policy and to enable or disable paths:

- Command line

- vSphere Web Client

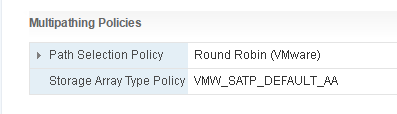

Changing the Multi-Pathing Policy

Web Client

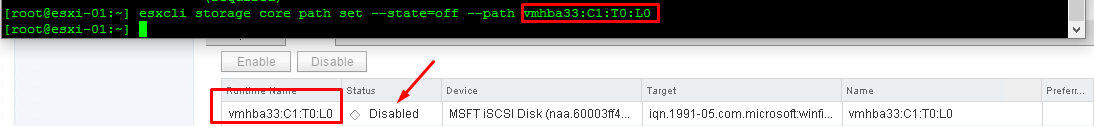

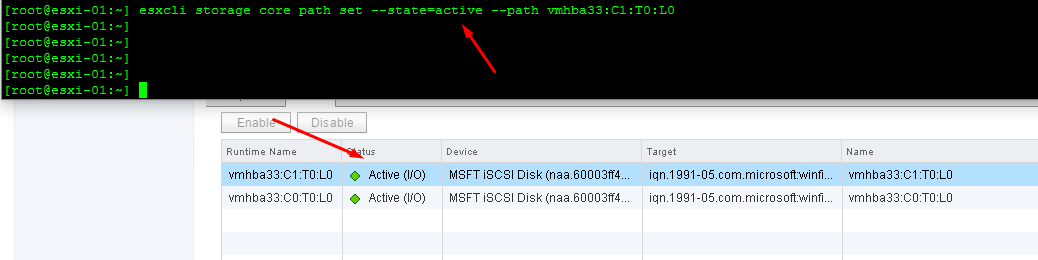

Enable/Disable a Path

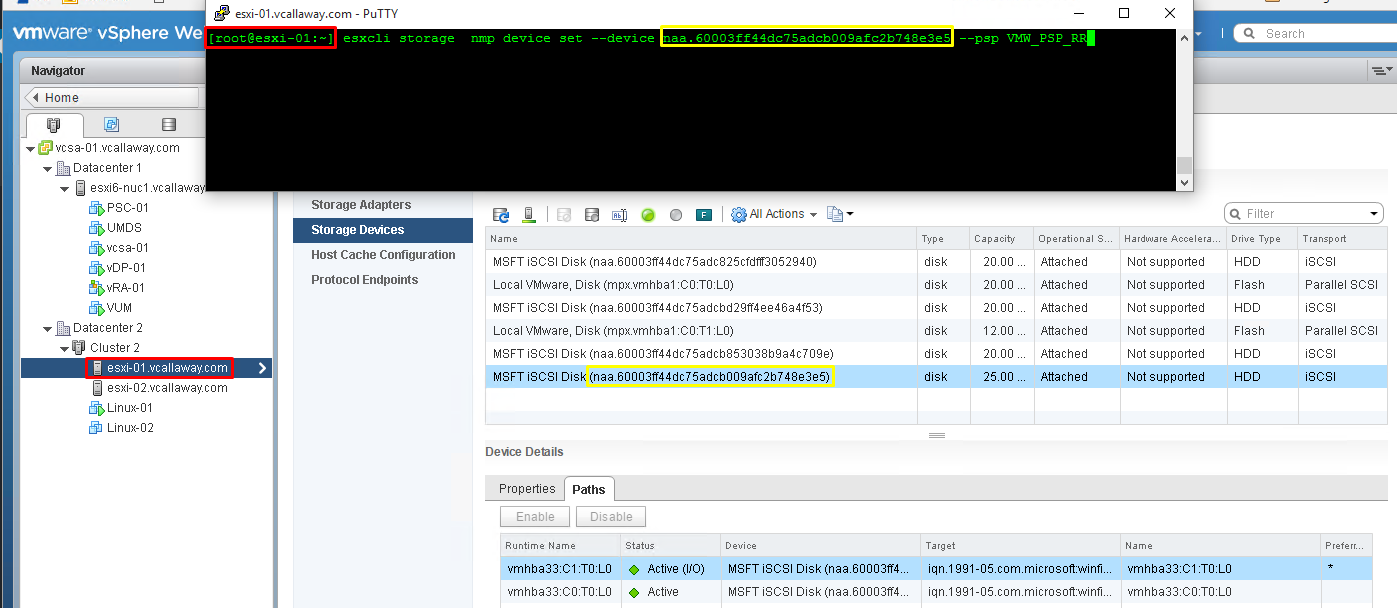

Change Path Policy Command Line:

Command: esxcli storage nmp device set --device naa_id --psp path_policy

For example, to change the LUN below from Fixed to Round Robin, we would run: esxcli storage nmp device set --device naa.60003ff44dc75adcb009afc2b748e3e5 --psp VMW_PSP_RR

To change it back, we run the same command with the original policy, VMW_PSP_FIXED (or VMW_PSP_MRU if the LUN was using Most Recently Used): esxcli storage nmp device set --device naa.60003ff44dc75adcb009afc2b748e3e5 --psp VMW_PSP_FIXED
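The per-device change can be wrapped in a small helper that rejects a mistyped policy name before esxcli is called. This is only a sketch: `set_device_psp` is a hypothetical function name, and it assumes the three standard NMP path selection plugins (VMW_PSP_FIXED, VMW_PSP_MRU, VMW_PSP_RR) and that it is run from the ESXi shell.

```shell
# set_device_psp is a hypothetical helper name, not a VMware-supplied tool.
# Usage: set_device_psp <naa_id> <path_policy>
set_device_psp() {
    device="$1"
    psp="$2"

    # Accept only the three standard NMP path selection plugins.
    case "$psp" in
        VMW_PSP_FIXED|VMW_PSP_MRU|VMW_PSP_RR) ;;
        *)
            echo "unknown path selection policy: $psp" >&2
            return 1
            ;;
    esac

    # Apply the policy, then list the device so the change can be confirmed.
    esxcli storage nmp device set --device "$device" --psp "$psp" &&
        esxcli storage nmp device list --device "$device"
}
```

For the LUN used in this section, the call would be `set_device_psp naa.60003ff44dc75adcb009afc2b748e3e5 VMW_PSP_RR`.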

Disable Path Command Line:

We can do the reverse to enable it.

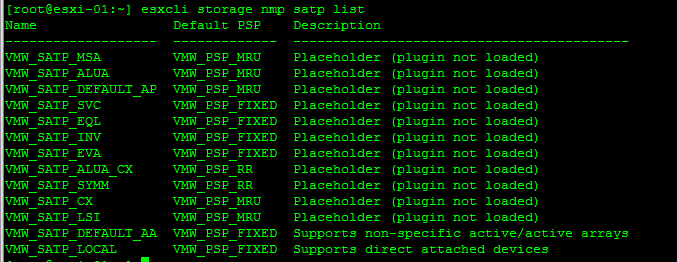
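The disable and enable steps above can be sketched with `esxcli storage core path set`, which takes a path's runtime name and a state of `off` or `active`. The helper name `set_path_state` and the example path name are assumptions made for illustration; the real path names for a LUN can be found with `esxcli storage core path list --device naa_id`.

```shell
# set_path_state is a hypothetical helper name.
# Usage: set_path_state <runtime_path_name> <off|active>
set_path_state() {
    path_name="$1"
    state="$2"

    # "off" disables the path, "active" enables it again.
    case "$state" in
        off|active) ;;
        *)
            echo "state must be 'off' or 'active'" >&2
            return 1
            ;;
    esac

    esxcli storage core path set --state "$state" --path "$path_name"
}
```

With an assumed path name, `set_path_state vmhba33:C0:T0:L0 off` would disable the path and `set_path_state vmhba33:C0:T0:L0 active` would bring it back.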

Change the Default Pathing Policy for New/Existing LUNs

Check the existing policy (the output lists each SATP with its default PSP):

esxcli storage nmp satp list

Change the default path policy:

esxcli storage nmp satp set --default-psp=policy --satp=your_satp_name
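As a rough sketch, the check and the change can be combined so the SATP list is printed again right after the default is updated. `set_default_psp` is a hypothetical helper name, and the SATP name in the usage line is only an example; use the name reported for your array.

```shell
# set_default_psp is a hypothetical helper name.
# Usage: set_default_psp <satp_name> <path_policy>
set_default_psp() {
    satp="$1"
    psp="$2"

    # Change the default PSP for the SATP, then list all SATPs
    # so the new default can be confirmed in the output.
    esxcli storage nmp satp set --default-psp "$psp" --satp "$satp" &&
        esxcli storage nmp satp list
}
```

Example call, assuming an array claimed by the generic active/active SATP: `set_default_psp VMW_SATP_DEFAULT_AA VMW_PSP_RR`.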

Analyze and Resolve vSAN Configuration Issues

Host with the vSAN service enabled is not in the vCenter cluster: add the host to the vSAN cluster.

Host is in a vSAN-enabled cluster but does not have the vSAN service enabled: verify that the vSAN network on the host is configured correctly.

vSAN network is not configured: configure the vSAN network on the virtual switches that will connect the vSAN cluster.

Host cannot communicate with all other nodes in the vSAN-enabled cluster: check for network isolation.
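The four conditions above can be checked from the host's command line as well as from the Web Client. The sketch below prints the host's cluster membership and the VMkernel interfaces tagged for vSAN traffic; `vsan_quick_check` is a hypothetical helper name, and interpreting the output is left to the reader.

```shell
# vsan_quick_check is a hypothetical helper name.
vsan_quick_check() {
    # Shows whether the host has joined a vSAN cluster and which members it sees.
    echo "== vSAN cluster membership =="
    esxcli vsan cluster get

    # Shows which VMkernel interfaces carry vSAN traffic; no entries here
    # suggests the vSAN network has not been configured on this host.
    echo "== vSAN VMkernel interfaces =="
    esxcli vsan network list
}
```

If the member list is shorter than the number of hosts in the cluster, a `vmkping` between the vSAN VMkernel interfaces is a reasonable next step to look for network isolation.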

Troubleshoot iSCSI Connectivity Issues

If connectivity is maintained by the VMware networking stack we can follow the steps below. If not, it’s good to consult the storage vendor for their troubleshooting steps.

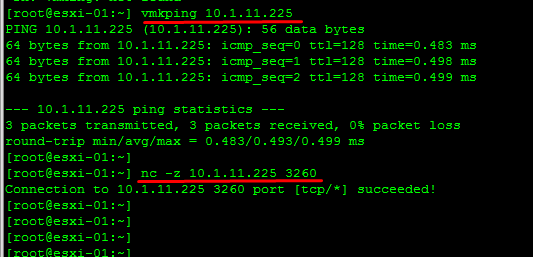

- On the affected host, test network connectivity to the storage array with the vmkping target_ip command.

- Check that the iSCSI port is open on the storage array: nc -z target_ip 3260

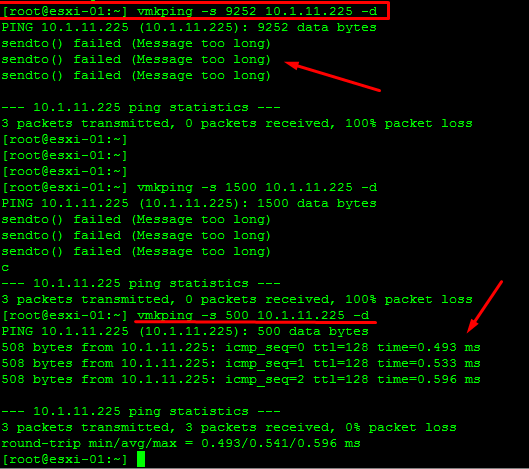

- Test for an MTU mismatch, for example jumbo frames that are not enabled on every device between the host and the array.
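The three checks above can be run in sequence as a sketch. For the MTU test, the ping payload has to be the MTU minus 28 bytes (20 for the IPv4 header and 8 for the ICMP header), sent with the don't-fragment flag so an undersized hop fails instead of silently fragmenting. The function names, the VMkernel interface and the target address in the usage line are assumptions.

```shell
# Largest ICMP payload that fits in one frame for a given MTU:
# MTU minus the 20-byte IPv4 header and the 8-byte ICMP header.
icmp_payload_for_mtu() {
    echo $(( $1 - 28 ))
}

# check_iscsi_target is a hypothetical helper name.
# Usage: check_iscsi_target <vmk_interface> <target_ip> <mtu>
check_iscsi_target() {
    vmk="$1"
    target="$2"
    mtu="$3"

    # 1. Basic reachability from the chosen VMkernel interface.
    vmkping -I "$vmk" "$target" || return 1

    # 2. Is the iSCSI port (TCP 3260) open on the array?
    nc -z "$target" 3260 || return 1

    # 3. Full-size ping with don't-fragment (-d) set; this fails if any
    #    hop along the path has a smaller MTU than expected.
    vmkping -I "$vmk" -d -s "$(icmp_payload_for_mtu "$mtu")" "$target"
}
```

For a jumbo-frame setup this might be called as `check_iscsi_target vmk1 10.0.0.10 9000`, which sends an 8972-byte payload in the last step.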

If all of the above checks out, we need to check whether the storage array has security restrictions enabled.

These restrictions could include:

- IP restrictions

- IQN restrictions

- CHAP restrictions

We also need to check that the volume/LUN is online.

A number of different scenarios and problems can occur depending on the type of storage you are using. For all types of storage, the first step is to check the hardware configuration and the connectivity between the host, network and storage. Examining the logs is another good way to narrow down the root cause of the issue. Log location: /var/log/vmkernel.log. Look for storage-related events, such as those from the NMP (Native Multipathing Plugin).
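One simple way to pull storage-related events out of the log is a case-insensitive grep. The sketch below is an assumption about which keywords are useful (NMP and SCSI); `storage_events` is a hypothetical helper name, and the pattern can be widened as needed.

```shell
# storage_events is a hypothetical helper name.
# Usage: storage_events [logfile]   (defaults to /var/log/vmkernel.log)
storage_events() {
    logfile="${1:-/var/log/vmkernel.log}"

    # Keep only lines mentioning NMP or SCSI (case-insensitive),
    # and show the 50 most recent matches.
    grep -iE 'nmp|scsi' "$logfile" | tail -n 50
}
```

Running `storage_events` with no argument reads the default log location.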

Troubleshooting for iSCSI

- Check physical connections

- Test connectivity with ping and vmkping (ping from the VMkernel).

- Correct switch ports and physical switch configuration (VLANs, MTU, switch port enabled, IPs, routing)

- Target IPs and ACLs on the LUNs (these could be IQN-based).

- VMkernel port bindings

- LUN authentication
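For the adapter-side items in this list (VMkernel bindings and whether the host is actually logged in to the target), the `esxcli iscsi` namespace gives a quick view. This is a minimal sketch; `iscsi_adapter_report` is a hypothetical helper name and the adapter name in the usage line is an assumption.

```shell
# iscsi_adapter_report is a hypothetical helper name.
# Usage: iscsi_adapter_report <adapter_name>
iscsi_adapter_report() {
    adapter="$1"

    # All iSCSI adapters on the host, to confirm the adapter name and state.
    esxcli iscsi adapter list

    # VMkernel ports bound to the adapter (port binding).
    esxcli iscsi networkportal list --adapter "$adapter"

    # Active sessions; an empty list suggests the login to the target is failing.
    esxcli iscsi session list --adapter "$adapter"
}
```

Example: `iscsi_adapter_report vmhba33`.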

Troubleshooting for NFS

- Check physical connections

- Physical switch configurations (VLANs, MTU, switch port enabled, IPs, routing)

- Test connectivity with ping

- Check IPs, firewalls, ports

- Permissions on the export/share
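The connectivity and port checks in this list can be sketched from the ESXi shell as follows. It assumes the NFS server listens on the standard TCP port 2049 (NFSv3 servers typically also need the portmapper on port 111); `check_nfs_server` is a hypothetical helper name and the address in the usage line is an example.

```shell
# check_nfs_server is a hypothetical helper name.
# Usage: check_nfs_server <nfs_server_ip>
check_nfs_server() {
    server="$1"

    # Reachability from the VMkernel network stack.
    vmkping "$server" || return 1

    # Is the NFS port (TCP 2049) reachable through any firewalls?
    nc -z "$server" 2049 || return 1

    # Mounted NFS datastores and whether each one is currently accessible.
    esxcli storage nfs list
}
```

Example: `check_nfs_server 10.0.0.20`. Export permissions still have to be verified on the NFS server itself.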

Troubleshoot Virtual SAN

- vSAN overall health

- Review logs for the disks, network and physical servers (vmkernel.log)

- Check network connectivity (NICs, switch configuration, IPs, routing)

- Hardware compatibility (firmware/drivers)

- Virtual machine compliance within vSAN

Troubleshoot RDM Issues

- Configure the virtual machine that uses the RDM to ignore the SCSI INQUIRY cache.

- scsix:y.ignoreDeviceInquiryCache = "true" (where x is the SCSI controller number and y is the SCSI target number of the RDM).
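To make the x and y substitution concrete, the sketch below prints the option line for a given controller and target number. `rdm_inquiry_cache_option` is a hypothetical helper name; the assumption here is that the resulting line is added to the virtual machine's configuration parameters while the VM is powered off.

```shell
# rdm_inquiry_cache_option is a hypothetical helper name.
# Usage: rdm_inquiry_cache_option <controller_number> <target_number>
rdm_inquiry_cache_option() {
    # Prints the option with x and y filled in, ready to paste
    # into the VM's configuration parameters.
    printf 'scsi%s:%s.ignoreDeviceInquiryCache = "true"\n' "$1" "$2"
}
```

For an RDM attached as target 0 on SCSI controller 1, `rdm_inquiry_cache_option 1 0` prints `scsi1:0.ignoreDeviceInquiryCache = "true"`.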